Area of Research: Technology & Human Environment

Elektron: Electrical Systems in Retrospect and Prospect

And I saw something like the color of amber,

like the appearance of fire round about enclosing it;

from what appeared to be his loins upward,

and from what appeared to be his loins downward,

I saw what appeared to be fire,

and there was a brightness round about him.

Ezekiel 1:27 (circa 595 b.c.)

In the ancient world, electrum (Hebrew) or elektron (Greek) was the material amber. Amber, when rubbed and electrified, preferably with cat fur, moved and lifted dust specks and small objects. The Greeks first identified electricity by its godlike capacity for action at a distance. This capacity and its control have been and will continue to be the trump cards in the invention and diffusion of electric machinery.

While its power and magic are old, electricity as an applied technology is young, with a history of barely more than a century. Two thousand five hundred years passed between Ezekiel and Thomas Edison. Today the electrical system can place power in precise positions in space with an immense range of capacity, from nanowatts to gigawatts. This spatial fingering is made possible by electrical conductors that are immersed in insulating space or solids. The conductors, which are basically metals, are impenetrable to electric fields and can modify and draw them into long thin threads reaching an office, home, or the memory cell in a computer chip.

Electromagnetic waves, as well as wires, transport electrical energy into space. Microwave guides and optical fibers resemble wires fingering into space. Efficient interfaces between the two modes of transport have developed, greatly extending the panoply of gadgets that transform electricity into useful actions.

Electrical technology is one of the few technologies that emerged straight from science and organized research. The lexicon of electricity (ohms, amperes, galvanometers, hertz, volts) is a gallery of great scientists of the eighteenth and nineteenth centuries. Applications of electricity were the subject of the first systematic industrial research laboratory, established in 1876 by Edison in Menlo Park, New Jersey. There, Edison and his colleagues made the phonograph in 1877, a carbon-filament incandescent lamp in 1879, and myriad other inventions.

The earliest attempts to apply electricity came from laboratories studying electrostatic phenomena. Medicine, always curious to test new phenomena in the human body that promised healing or strength, led the way. Many claims sprang from the spark, shock, and sizzle of electrostatic phenomena. Eighteenth-century scientists reported that electric charges made plants grow faster and that electric eels cured gout. They sent electrical charges through chains of patients to conquer disease and, as among the clientele of Dr. James Graham’s fertility bed in London, to create life. C. J. M. Barbaroux, later a leader of the Girondist faction in the French Revolution, enthused in 1784:

O feu subtil, âme du monde,

Bienfaisante électricité

Tu remplis l’air, la terre, l’onde,

Le ciel et son immensité.1

Electricity brought to life the subject of Dr. Frankenstein’s experiments in Mary Shelley’s famous novel, published in 1818. An application of electricity also vitalized the ancient Egyptian in Edgar Allan Poe’s 1845 story “Some Words with a Mummy.”2 Upon awakening, the mummy observes to the Americans gathered round him, “I perceive you are yet in the infancy of Galvanism.” Later in the nineteenth century the Swedish playwright August Strindberg wrapped himself in currents to elevate his moods and even gave up writing to pursue electrical research until he badly burned his hands in an ill-planned experiment.

Popular imagery notwithstanding, the high-voltage, low-current electrostatic phenomena were at the core of electric research until only about 1800, when Alessandro Volta announced his invention of the battery. Volta introduced the more subtle low-voltage, high-current game of electrodynamics. Twenty-five years linked the flow of electric currents to the force of electric magnets. Another twenty-five years bound the two productively into the electric dynamo and motor.

Among the key figures in the electromechanical game was an American, Joseph Henry, who, with the Englishman Michael Faraday, contributed a series of discoveries leading to practical electric generators. Tracing a bright path back to Benjamin Franklin, electricity was one of the first fields of research in which the United States assumed a leading role, and one of the technologies to diffuse earliest in America. As we shall see, once the interface between mechanical and electrical power had been invented, the niche for expansion proved immense.3

Power for the Workshop

Since the Middle Ages, water wheels had provided the primary drive for grinding grain, fulling cloth, working metal, and sawing wood. But mechanical power drawn from water or wind did not permit action at a distance, except through even more mechanical devices. These could become sophisticated and baroque. For example, a cable system spread 1 megawatt of mechanical power from the falls of Schaffhausen, Switzerland, to the industrial barracks around them. The mechanically drawn San Francisco cable cars continue to delight visitors but only travel a distance of one or two kilometers.

Powered by water, workshops had to be riparian. “Zavod,” the Russian word for a plant, literally means “by the water.” Ultimately, steam detached power from place. Over a period of decades, steam engines overtook water wheels. In America, steam needed one hundred years to supersede water. Though we recall the nineteenth century as the age of steam, water did not yield first place until 1870. The primacy of steam in America would then last just fifty years (Figure 1).

Figure 1. Sources of Power for Mechanical Drives in the United States. Note: The upper panel shows the absolute horsepower delivered by each type and their sum. The lower panel shows the fraction (F) of the total horsepower provided by each type, according to a logistic substitution model. Data Source: Warren D. Devine, Jr., “From Shafts to Wires: Historical Perspective on Electrification,” Journal of Economic History 43 (1983): 347-372; Table 3, p. 351.

At first, steam preserved the layout of the factory. It simply provided more flexible and dependable mechanical energy. The small early steam engines usually operated individual devices. A leap forward came with the advent of the single, efficient, central steam station to serve all the machinery inside a plant. Pulleys rotating above the heads of the workers provided power for their diverse machines via vibrating and clapping belts. But the network of beams, blocks, cords, and drums for transmitting the steam power to the machinery on the floor encumbered, endangered, and clamored.

The electric motor drive, which emerged around 1890, revolutionized the layout of the factory. The first era of electrical systems commenced. The steam engine now ran an electric generator that penetrated the factory with relatively inconspicuous copper wires carrying electricity, which in turn produced mechanical energy at the point of consumption with an electric motor. Here was the seed of modern manufacturing. The electric motor drive permitted the factory machines to be moved along the production sequence, rather than the reverse.

One might suppose that the superior electric transmission, with a generator at one end and motors at each machine, would quickly supplant the old mechanical system. In fact, as Figure 1 shows, the process required fifty years. Resistance was more mental than economic or technical. In 1905 the influential American historian and journalist Henry Adams chose the images of the Virgin and the dynamo around which to write his autobiography.4 The dynamo symbolized the dangerous, inhuman, and mindless acceleration of social change.

Power for the Region

By the time arcs and lamps emerged from Mr. Edison’s workshops, the generator could illuminate as well as grind, cut, and stamp. But the paradigm of the single generator for the single factory was soon superseded by the idea of a generator, or, better yet, a power plant, serving an entire community.

At first, electric companies were necessarily small. Technology for the transport of electricity particularly limited the scale of operations. The original Edison systems were based on low-voltage direct current (dc), which suffered drastic energy losses over distance. Each piece of territory thus required its own company, and founding a new company meant filling a piece of territory or market niche.

Consider eastern Pennsylvania, a coal-and-steel region where some of the earliest Edison utilities began (Figure 2). Entrepreneurs swarmed the area to spread the successful innovation. About 125 power-and-light companies were established between the middle 1880s and early 1920s, with 1897 being the year of peak corporate fertility. The rush to form companies was a cultural pulse, diffused by imitation.5

Figure 2. Founding and Consolidation of Electric Companies in the United States. Note: The main figure presents the two sets of data shown in the inset panel fitted to a linear transform of the logistic curve that normalizes each process to 100 percent, with estimates for the duration of the process, its midpoint, and saturation level indicated. Data Source: Pennsylvania Power and Light, Corporate History in nine volumes, Origin and Development of the Company, vol. 1, Allentown, Pa., 1940.
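The logistic curve behind Figure 2 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the fitting procedure used for the figure: it assumes the common three-parameter form described by a saturation level, a midpoint, and a duration (taken here as the time to grow from 10 to 90 percent of saturation), and it applies the linear transform log[F/(1 - F)]. The saturation of 125 companies and the year 1897, read here as the midpoint, echo the text; the 25-year duration and all function names are hypothetical choices made for illustration.

```python
import math

def logistic(t, saturation, midpoint, duration):
    """Logistic growth; `duration` is the time taken to rise from 10% to 90% of saturation."""
    rate = math.log(81) / duration  # ln(81) spans the 10%-90% interval
    return saturation / (1.0 + math.exp(-rate * (t - midpoint)))

def linear_transform(value, saturation):
    """log10 of F / (1 - F), which plots a logistic as a straight line."""
    f = value / saturation
    return math.log10(f / (1.0 - f))

# 125 and 1897 come from the text; the 25-year duration is an assumption.
SATURATION, MIDPOINT, DURATION = 125.0, 1897.0, 25.0

for year in (1885, 1897, 1910):
    n = logistic(year, SATURATION, MIDPOINT, DURATION)
    print(year, round(n, 1), round(linear_transform(n, SATURATION), 2))
```

With this parameterization the curve passes through half of saturation at the midpoint, and the transformed values fall on a straight line whose slope is set by the duration.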

The evolution of technology to transport electricity, combined with the increase in the density of consumption (kW/km²), made higher transmission voltages economical and progressively coalesced companies. The key technology, first explored in the 1880s by the inventor Nikola Tesla, was alternating current (ac), which could be raised in voltage through transformers and then transmitted long distances with low losses. The merger wave crested in 1914. By 1940 the resulting process left only Pennsylvania Power and Light in operation.

When companies cover a geographical space, their natural tendency is to coalesce, like soap bubbles, especially if a technology permits the larger scale physically and encourages it economically. Several non-technical factors, including government and consumer fears about monopoly, can set limits on scale. Early in the century, Samuel Insull’s “electricity empire,” centered in Chicago, evoked public regulation, which became normal for the industry. Rapid growth and change usually elicit external regulation. Still, the systems grow in the long run, as we shall see.

In the provision of electric power, the overriding independent variable is spatial energy consumption. Its increase leads to higher-capacity transport lines using higher voltage, making it possible to transport energy over longer distances with generators having higher power. This “higher and higher” game led the United States from the 10-kilowatt generator of Edison to the 1-gigawatt generators of today, one hundred thousand times larger.6

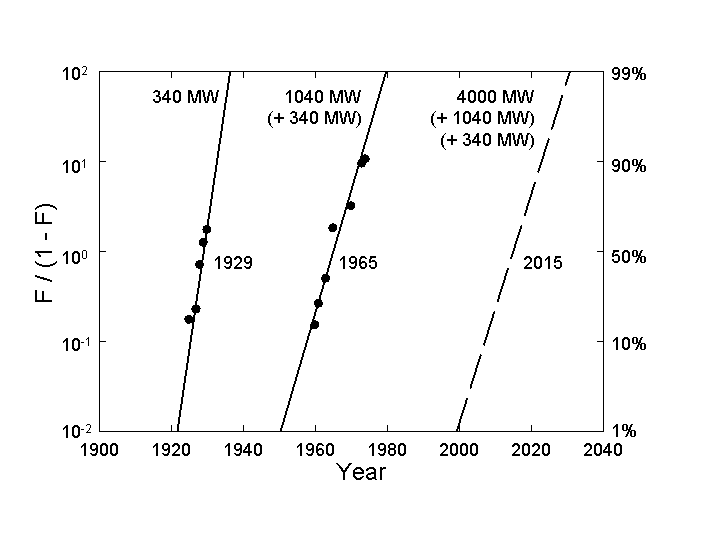

In fact, the expansion divides into two eras, as we see in Figure 3, which shows the evolution of the maximum line capacity of the US electric system. For the line-capacity indicator, we take over time the square of the highest voltage that is operational. Although various factors lower actual line capacity in practice, this indicator provides a consistent measure of power capacity for analysis of long-term trends.7 The maximum line capacity grows in two waves, one centered in 1921 and the second fifty-four years later in 1975.

Figure 3. Capacity of Top US Power Lines. Note: The units are kV²/1,000, a rough measure of power capacity. This figure as well as Figures 4, 6, and 8 show a two-phase process analyzed as a “bi-logistic” normalized with a linear transform. In essence, one S-shaped growth curve surmounts another. The actual values are the sum of the two waves, once the second wave is underway. See Perrin S. Meyer, “Bi-logistic Growth,” Technological Forecasting and Social Change 47 (1994): 89-102. Data Source: Edison Electric Institute, Washington, D.C.
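The two ingredients of Figure 3, the line-capacity indicator and the sum of two S-shaped pulses, can be sketched as follows. This Python fragment is an illustration only. The indicator follows the definition in the text (the square of the highest operating voltage in kilovolts, divided by 1,000), and the midpoints of 1921 and 1975 are those given above. The saturation levels, the 30-year durations, and every function name are hypothetical, and the logistic is assumed to be parameterized by the time needed to go from 10 to 90 percent of saturation.

```python
import math

def line_capacity_indicator(max_voltage_kv):
    """Square of the highest operating voltage (kV), divided by 1,000."""
    return max_voltage_kv ** 2 / 1000.0

def logistic(t, saturation, midpoint, duration):
    """One S-shaped pulse; `duration` is the 10%-to-90% growth time."""
    rate = math.log(81) / duration
    return saturation / (1.0 + math.exp(-rate * (t - midpoint)))

def bi_logistic(t, first_wave, second_wave):
    """Sum of two logistic pulses, each given as (saturation, midpoint, duration)."""
    return logistic(t, *first_wave) + logistic(t, *second_wave)

# Midpoints are from the text; saturations and durations are hypothetical.
FIRST_WAVE = (100.0, 1921.0, 30.0)
SECOND_WAVE = (2000.0, 1975.0, 30.0)

print(line_capacity_indicator(1500))  # indicator value for a 1.5-megavolt line
for year in (1900, 1921, 1950, 1975, 2000):
    print(year, round(bi_logistic(year, FIRST_WAVE, SECOND_WAVE), 1))
```

Because the second pulse is added on top of the first, the combined curve approaches the sum of the two saturation levels once both waves have run their course.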

We label the first wave “Steinmetz,” for Charles Proteus Steinmetz, the founding leader of the engineering department of the General Electric Company (GE) and a symbol of the fruitful interaction of mathematical physics and electrical technology.8 Following the pioneering work of Tesla, Steinmetz began investigating the problems of long-distance transmission and high-voltage discharges around 1905. The spectacular success of GE in subsequent decades testifies to the timeliness of Steinmetz’s innovations. New alternating-current systems and related gadgets made huge profits for GE and the other leading equipment supplier, Westinghouse, and incidentally killed many small-scale utilities, as in Pennsylvania.

The second pulse of growth in line voltage reaches a temporary ceiling at about 1.5 megavolts. Interestingly, the stretches of innovative activity, as measured by the interval to achieve 10 to 90 percent of the system development, cover only about half the time of electricity’s waves of growth. Two to three decades of rapid expansion are digested in a comparably long period of stability and consolidation, a frustrating cycle for engineers. Again the limit may not be technical or economic, but social. Society tailors the expanded system to fit its norms for safety and harmony. One constraint is available rights-of-way, which are very limited at present.

Because the area of the United States is constant and filled by the electrical network, total generating capacity approximates the spatial density of consumption. The growth in installed generating capacity also splits into two pulses, centered around 1923 and 1971 (Figure 4). At peak times operators experience the most rapid change and customers suspect the operators’ ability to handle it. During the second wave, annual growth in consumption peaked in the 1950s and 1960s at more than 10 percent per year for many US utilities. The system in the Northeast blacked out one day in November 1965, prompting regional power pooling arrangements. To address concerns about the reliability of the entire network, the industry consorted to form the Electric Power Research Institute, which opened its doors in 1973 under the leadership of Chauncey Starr, for whom we name electricity’s second wave.9

Figure 4. Installed Electric Generating Capacity in the United States. Data Source: US Bureau of the Census, Historical Statistics of the United States (Washington, D.C.: US Bureau of the Census, 1978); and US Bureau of the Census, Statistical Abstract of the United States (Washington, D.C.: US Bureau of the Census, 1978, 1981, 1984, 1986, 1989, 1991, 1992, 1994).

The current pulse of growth in US generating capacity reaches a ceiling around 765 gigawatts. The actual system growth has exceeded 90 percent of the niche, which in our view explains the recent slowdown in the building of power plants, nuclear or other, in the United States. The system anticipated the growth in demand that is tuned to economic development and technological diffusion, boxed into the long, roughly fifty-year economic cycles that have characterized the last two hundred years.10 At the end of the cycles, demand lags and overcapacity tends to appear.

Will the higher-and-higher game resume? In both line voltage and generating capacity, the growth in the second electrical wave exceeded the first by more than an order of magnitude. If the pattern repeats, the increase in electricity consumption will lead to ultra-high voltage lines (for example, + 2 megavolts) with higher capacity (for example, 5 or 10 gigawatts) and continental range. The great advantage of continental and intercontinental connections is that standby reserves and peak capacity can be globalized. The worldwide load would be smoothed over the complete and immanent solar cycle. Generators could also become very large, with corresponding economies of scale.

If the system evolves to continental scale, the much-discussed superconductivity at room temperature might not revolutionize transmission after all. Energy lost in transport and distribution is a stable 10 percent, a huge amount in absolute terms, but too small to change the basic economics if 2-megavolt lines cover the continents. Superconductivity could, however, bring about a revolutionary drop in the size of machinery, thereby permitting the construction of units of larger capacity.

Continental scale surely means increased international trade in electricity. All territory looks the same to electricity. If available technology is employed, electricity will stream across borders despite the political barriers that typically impede the easy flow of goods and ideas. Today Europe exchanges electricity almost freely. Italy buys from France the equivalent production of six 1-gigawatt nuclear reactors either via direct high-voltage lines or through Switzerland. Electricity trade could form a significant component of international payments over the next fifty to one hundred years, requiring reorganization and joint international ownership of the generating capacity. Electricity trade between Canada and the northeastern United States already elicits attention.

Utilization and Capacity

The utilization factor of generation plants counts heavily in the economy of the system and indicates the quality of its organization. The US electric industry searched successfully between 1910 and 1940 for efficient organization, notwithstanding the Great Crash of 1929, as the average annual utilization climbed from two thousand to above four thousand hours, a utilization rate of about 50 percent (Figure 5). The rise owed to spatial integration and the reduction of reserves consequent to the introduction of high-capacity transport lines with increasing operating voltage as well as the coordination of network dispatch to use plants more effectively.

Figure 5. The Rate of Utilization of US Electric Generating Plants. Data Source: US Bureau of the Census, Historical Statistics of the United States (Washington, D.C.: US Bureau of the Census, 1978); and US Bureau of the Census, Statistical Abstract of the United States (Washington, D.C.: US Bureau of the Census, 1978, 1981, 1984, 1986, 1989, 1991, 1992, 1994).
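The conversion from annual hours of utilization to a utilization rate is simple arithmetic, sketched here in Python. The only assumption is the usual convention that a plant running all year at full load logs 8,760 hours (365 days of 24 hours); the function name is ours.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year

def utilization_rate(full_load_hours):
    """Fraction of the year a plant would run at full capacity to deliver its annual output."""
    return full_load_hours / HOURS_PER_YEAR

for hours in (2000, 4000, 4380):
    print(hours, f"{utilization_rate(hours):.1%}")
```

On this convention, two thousand hours corresponds to a rate somewhat above 20 percent, and a rate of exactly 50 percent corresponds to 4,380 hours, consistent with "above four thousand hours" in the text.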

Since 1940 the system appears to have fluctuated around a utilization rate of 50 percent. Generators with low capital cost and high variable cost combine with base-load plants with high capital cost and low variable cost to determine the current usage level. Although the utilization factor surely has a logical upper limit well below 100 percent, even with high-voltage lines having continental reach, a 50-percent national average appears low, notwithstanding scorching August afternoons that demand extra peak capacity.

Breaking the 50-percent barrier must be a top priority for the next era of the industry. Otherwise, immense capital sits on its hands. One attractive way to make electric capital work around the clock would be to use plants at night. The mismatched timing of energy supply and demand existed when water power dominated. Pricing, automation, and other factors might encourage many power-consuming activities, such as electric steel-making, to go on the night shift. Nuclear heat, generating electricity by day, could of course help to make hydrogen at night. The ability to store hydrogen would make the night shift productive.

The nearness of overcapacity in the electrical system also creates suspicion that forecasting within the sector has not been reliable. Analyses of projections of total electricity use made by the US Department of Energy and others fuel the suspicion. Reflecting a period when electricity consumption had doubled in spans of ten years, in 1978 federal officials projected an increase by 1990 from 2,124 terawatt hours to 4,142 terawatt hours.11 The actual level for 1990 was 2,807 terawatt hours.
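The size of the miss is easier to judge as a growth rate. The Python sketch below compares the compound annual growth implied by the 1978 projection with the growth that actually occurred, using the three figures quoted above. It assumes, for illustration, that the 2,124 terawatt hours applies to 1978, so that the projection spans twelve years; the variable and function names are ours.

```python
def annual_growth_rate(start, end, years):
    """Compound annual growth rate that carries `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0

BASE_TWH = 2124.0            # starting level quoted in the text
PROJECTED_1990_TWH = 4142.0  # level projected for 1990
ACTUAL_1990_TWH = 2807.0     # level actually reached in 1990
YEARS = 1990 - 1978          # assumes the base figure applies to 1978

projected = annual_growth_rate(BASE_TWH, PROJECTED_1990_TWH, YEARS)
actual = annual_growth_rate(BASE_TWH, ACTUAL_1990_TWH, YEARS)
overshoot = PROJECTED_1990_TWH / ACTUAL_1990_TWH - 1.0

print(f"projected growth: {projected:.1%} per year")
print(f"actual growth:    {actual:.1%} per year")
print(f"projection exceeded the 1990 outcome by {overshoot:.0%}")
```

Under these assumptions the projection implied growth of between 5 and 6 percent per year, against realized growth of between 2 and 3 percent, and it overshot the 1990 level by nearly half.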

Can we do better? Fitting the data for total utility electric use to our model with data through 1977 yields an estimated level of about 2,920 terawatt hours for the growth pulse now ending (Figure 6). Net generation in 1993 was 2,883 terawatt hours. Projecting electricity demand matters because it influences investments in capacity. Accurate projections might have lessened the pain for the utilities, which ordered and then canceled plants; the equipment suppliers, who lost the orders; and consumers, who ultimately pay for all the mistakes.

Figure 6. Total US Electric Consumption. Note: Here and in Figure 8 the empty circles indicate periods of overlap in the sequential growth waves. Assigning the exact values to each wave during the periods of overlap is somewhat arbitrary. Data Source: US Bureau of the Census, Historical Statistics of the United States (Washington, D.C.: US Bureau of the Census, 1978); and US Bureau of the Census, Statistical Abstract of the United States (Washington, D.C.: US Bureau of the Census, 1978, 1981, 1984, 1986, 1989, 1991, 1992, 1994).

Power for the Home

As suggested earlier, electricity is a spatial technology. Conquering a territory means connecting with potential users. We tend to think that almost everyone was connected soon after the first bulb was lit, but in fact the process extended gradually over fifty years and culminated even in the United States only in mid-century (Figure 7). Although slowed by the Great Depression, non-rural hookups reached 90 percent of the market by 1940. Rural areas joined the grid about one generation later than cities, reaching a midpoint of the process in 1943 versus 1920 for the townsfolk. This interval measures the clout of rural politicians, who secured subsidies for the costly extension of power lines to areas of low population density, as well as the conservatism of the countryside.

Figure 7. Percentage of US Homes with Electric Service. Data Source: US Bureau of the Census, Historical Statistics of the United States (Washington, D.C.: US Bureau of the Census, 1978).

The data further confirm that electricity’s first century has encompassed two eras. During the developmental spread of the system until about 1940, most electricity went for industry and light, substituting for other energy carriers in already existing market niches. In the second era, electricity powered new devices, many of which could not have performed without it, such as televisions and computers. Most of the new demand came in the residential and commercial sectors.

Average residential consumption has increased by a factor of ten since 1940 and appears in our analyses to saturate in the 1990s at about 10,000 kilowatt hours per year. One might say that the customer is the home, not the human. Home appliances have increased by the tens and hundreds of millions: refrigerators, video-cassette recorders, vacuum cleaners, toasters and ovens, clothes washers and dryers, dishwashers, air conditioners, space heaters, and, more recently, personal computers, printers, and fax machines.

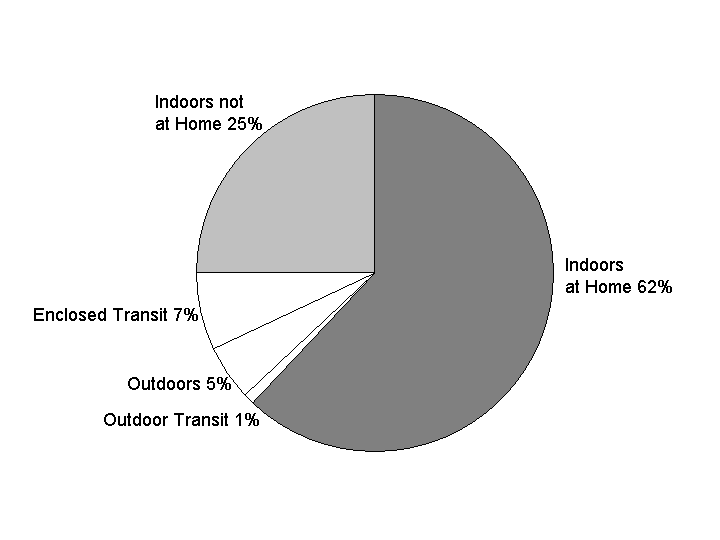

We emphasize the residential because it is becoming the number-one consumer. Residential consumption has grown faster than other major sectors over the past decades and in 1993 overtook industrial consumption in the United States. The number of housing units has grown sevenfold in the United States since 1900, while the number of people has tripled, as residents per unit have declined and second homes increased.12 As the second wave of electrification reaches its culmination, the residential share appears destined to plateau at about 35 percent of the total use of electricity, more than twice its share of the first wave. In a third wave of electricity, residential consumption may grow only at the same rate as overall consumption, or, if life-styles continue to include more home space and reduced working time, at an even faster rate.13 Californians already spend more than 60 percent of all their time at home indoors.14 So do New Yorkers and Indians.

Cleaning the Human Environment

In the absence of electricity, we heat, light, and power our homes and workplaces with wood, coal, kerosene, oil, manufactured city gas, and lesser fuels. Electrification has thus meant a cleaner, safer, and healthier environment at the level of the end-user, once protections against shock and other hazards were properly wired into the system. Dangers associated with open fires and smoke diminished. Better-ventilated homes and workplaces lessened exposure to influenza, pneumonia, tuberculosis, diphtheria, measles, whooping cough, scarlet fever, and other airborne threats. Modern refrigeration in homes, shops, trucks, and railroad boxcars reduced the numerous waterborne gastrointestinal threats.

Environmentally, electricity concentrates pollution at a few points. At these points we can deal with the problems or not. The main question then becomes: What is the primary energy source for the generation? The most wanted environmental culprit is carbon, and so the main environmental challenge for electricity may be summarized by the measure of the carbon intensity of electricity production, for example, the ratio of carbon by weight to kilowatt hours generated.15 In the United States, this ratio fell by half between 1920 and 1940, from about 500 metric tons of carbon per gigawatt hour produced to about 250. Since the 1940s, the US ratio has fallen only to a little below 200 metric tons per gigawatt hour and has remained rather flat in recent decades because coal has gained markets in electric power plants, offsetting efficiency gains in the operations of the plants as well as gains in terms of reductions that oil and especially gas would have contributed. Many other countries have continued to create more watts with fewer carbon molecules. The powerful underlying evolution of the energy system from coal to oil to natural gas to nuclear or other carbon-free primary sources will bring reductions.16 The world appears a bit past the middle point of a decarbonization process that will take another 150 years for completion. The United States will not long remain apart from the global movement.

Electricity production was originally based on coal alone. At present, it is the only outlet for coal. Even steel-making, which historically consumed a substantial fraction of coal (sometimes more than 10 percent), abandoned coal, dropping demand. Coal will fight hard to keep its last customer. Interestingly, electricity was never linked to oil, one of the other major transforming technologies of the twentieth century. Electricity and oil may now begin to compete seriously for the transport market, as we discuss later. Natural gas is already penetrating the electrical system thanks to the great flexibility, low capital cost, quick starts, and efficiency of gas turbines. At present, electricity remains the only product of the nuclear system. Approaching an energy system with zero emissions, about which all environmentalists dream, will require nuclear to diversify into the hydrogen-making business. The team of electricity and hydrogen can eventually solve all the problems of pollution at the level of the end-user of energy.

Electrical systems can add visual pollution with their network of towers, wires, and poles. Militant Greens already dynamite pylons and will accept no new structures. New technologies can increase the capacity of the existing lines and diminish intrusions. In this regard, direct current, now ultra-high, may have a second life as a bulk carrier aided by relatively cheap electronics, such as thyristors, which are capable of transforming all types of units of electricity into all others. Burying power lines might beautify the landscape, as well as lessen fears about the health effects of electromagnetic fields.

Feeding the Electrical System

A growing share of primary fuels generates electricity; again, two waves are evident (Figure 8). At the world level, the first centered in 1920 and the second in 1970. The present wave is saturating at close to 40 percent. For the United States, the current wave appears to have saturated at about the same level.

Figure 8. Percentage of World Primary Fuels Converted to Electricity. Data Source: Nebojsa Nakicenovic, personal communication, 1995.

Is there a limit to the fraction of fuels feeding into the electrical system? Many energy buffs postulate a ceiling at around 50 percent. A third era of electrical growth does seem likely to occur. Electricity is more flexible and fungible than hydrocarbon fuels. The innumerable devices of the information revolution require electrical power. The transport sector, which has remained largely reliant on oil, could accept more electricity. But the drawbacks are the inefficiencies and the costs of the transformation.

Inefficiencies are eventually eaten up.17 A successful society is, after all, a learning system.18 In fact, perhaps the greatest contribution of the West during the past three hundred years has been the zeal with which it has systematized the learning process itself through the invention and fostering of modern science, institutions for retention and transmission of knowledge, and diffusion of research and development throughout the economic system. But learning may still go slowly when problems are hard.

The Six-Hundred-Year War for Efficiency

The degree of difficulty for society to learn about power and light shows quantitatively in the duration of the process improvements illustrated in Figure 9. Technologists fought for three hundred years to bring the efficiency of steam power generation from 1 percent in 1700 to about 50 percent of its apparent limit today. Electrical energy is glorified as the purest form of free energy. In fact, the heat value of other fuels when they burn also corresponds to free energy. Thus, the thermodynamic limit of electric generators is 100 percent. Of course, it can be very difficult to reduce losses in combustion. Still, we may muse that during the next three hundred years efficiency will go to 99 percent.19 This long trajectory suggests that the structure upstream for power generation does not leave much room for spectacular breakthroughs.
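The symmetry of this six-hundred-year trajectory can be checked with a short calculation. The Python sketch below assumes, for illustration only, that efficiency follows a logistic curve toward a limit of 100 percent and that "today" means the year 2000; it fits the curve through the two points given in the text (1 percent in 1700, 50 percent in 2000) and extrapolates three hundred years. The function names are ours, and the calculation is not the analysis behind Figure 9.

```python
import math

def logit(fraction):
    """Natural log of F / (1 - F), the linear transform of a logistic."""
    return math.log(fraction / (1.0 - fraction))

def fit_two_points(t1, f1, t2, f2):
    """Growth rate and midpoint of the logistic passing through two (year, fraction) points."""
    rate = (logit(f2) - logit(f1)) / (t2 - t1)
    midpoint = t1 - logit(f1) / rate
    return rate, midpoint

def fraction_at(t, rate, midpoint):
    """Fraction of the limit reached in year t."""
    return 1.0 / (1.0 + math.exp(-rate * (t - midpoint)))

# 1 percent in 1700 and 50 percent "today" (assumed here to be the year 2000).
rate, midpoint = fit_two_points(1700, 0.01, 2000, 0.50)
duration_10_to_90 = math.log(81) / rate

print(round(midpoint), round(duration_10_to_90), round(fraction_at(2300, rate, midpoint), 3))
```

Under these assumptions the midpoint falls in 2000 and the curve reaches 99 percent in 2300, so the 99-percent musing is simply the mirror image of the first three hundred years; the implied 10-to-90-percent interval is a little under three centuries.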

Figure 9. Improvement in the Efficiency of Motors and Lamps Analyzed as a Sigmoid (logistic) Growth Process. Note: Shown in a linear transform that normalizes the ceiling of each process to 100 percent. Main Sources of Data: for lamps, Encyclopaedia Britannica, 1964; for motors, Hans Thirring, Energy for Man (Bloomington, Ind.: Indiana University Press, 1958).

Still, 70-percent efficiency can be eyed as the next target, to be achieved over fifty years or so. Turbosteam plants with an efficiency of about 60 percent have been constructed. Although further gains in this regard appear limited, the massive diffusion of highly efficient turbine technology is sure to be a lucrative and influential feature of the next fifty years or so. Fuel cells, avoiding the free energy loss almost inevitable in the combustion process on which turbines rely, may well lead to even higher efficiencies. Electrochemistry promises such technology but mentally seems more or less still stuck in Edison’s time. Perhaps solid-state physics can produce the insights leading to the needed leap forward as specialists in this field become more interested in surfaces, where the breakthroughs need to occur.

At the 70-percent level of efficiency, an almost all-electric distribution of primary energy looks most appealing. The catch is the load curve, which seems likely to remain linked to our circadian rhythms. In Cole Porter’s song lyric, we hear “Night and day, you are the one”; but in energy systems night still dims demand and means expensive machinery remains idle. Even in cities famous for their nightlife, nocturnal energy demand is only one-third of the daytime requirement. The ratio of day to night activity does not seem to have changed much. The ancients actually spent considerable time awake at night, despite miserable illumination. The fine old word “elucubrate” means to work by the light of the midnight oil, according to the Oxford English Dictionary.

Even if most humans continue to sleep at night, we have pointed out earlier that their energy-consuming machines can work nocturnally. In fact, remote control and the shrinking work force required to operate heavy industry ease the problem. So, too, will linking parts of the globe in sun and shade, summer and winter.

Still, we should clearly look further for efficiency gains. Much large electrical machinery is already so efficient that little or no gain is to be expected there. But a discontinuous step could yet come in the progress of machinery. Superconductivity, when it permits high magnetic fields, can lead to compactly designed motors with broad applications and very low energy losses. The proliferation of numerous micro-machines will of course tend to raise electricity demand, partially offsetting the efficiency gains they offer. The miniaturization of circuits and other aspects of computing systems in the past two decades shows how powerfully reducing the size of objects can increase their applications and numbers.

The Splicer

In proposing a more general solution we need to introduce another consideration, namely, reliability. The main drawback of an electrical system is that it permeates the web of social services, so that a breakdown, even for a few hours, can bring tragedy. A defense against this vulnerability, as well as a means of addressing cyclical loads, could come with the diffusion of multipurpose minigenerators at the level of individual consumers. In effect, we would delegate base load to the global system, leaving peaking and standby to a new multipurpose household appliance. Multipurpose means the device could produce heat, electricity, and cold on demand.

Such combined thermal, electric, and cooling systems, which we will call “splicers,” are under development. Attempts so far, such as the FIAT TOTEM, have been unsuccessful, in part because the marketed models lack the basic characteristic of zero maintenance required by household gadgets. Still, the scheme is appealing, both functionally and economically. The Japanese are doing a sizable amount of research and development in what appears to be a promising direction: Stirling engines with free-floating pistons and a power output of a few kilowatts. The machines are maintenance-free, silent, and can compress fluids for the heating and cooling cycles on top of producing electricity with linear oscillating generators. The models described in the literature are powered by natural gas.

In conjunction with a clean gas distribution system, the penetration of the splicer as a home appliance over the next fifty years could revolutionize the organization of the electrical system. The central control could become the switchboard of millions of tiny generators of perhaps 5 kilowatts. Electric utilities might initially abhor the technology that brings such functional change, but already some plan to use it. One attraction is that the final user immediately pays the capital cost.

In any case, the breakthroughs may come instead on the side of the consumers. A number of well-known machines and appliances need technological rejuvenation, as efficiencies are systematically low. And new machines need to be invented. At a high level of abstraction, human needs are invariant: food, clothing, shelter, social rank, mobility, and communication (a form of mobility where symbols move instead of persons or objects). Let us guess the shape of the new machines in the areas of vision and warmth.

Efficient Vision

Illumination, the first brilliant success of electricity beyond powering the workshop, provides a good example. Breaking the rule of the night is an old magical dream. The traditional tools (oil lamps, torches, and candles) were based on a flame with relatively low temperature and small amounts of incandescent soot to emit the light. They performed the task poorly (see Figure 9).20 The typical power of a candle is 100 watts, but the light efficiency is less than 0.1 percent.

Electricity fulfilled the dream, almost from the beginning, with arc lights, whose emitting source was solid carbon at temperatures of thousands of degrees centigrade.21 The light was as white as the sun, and efficiency reached about 10 percent. The technical jump was enormous. Theaters, malls, and monuments were lavishly illuminated. People were seduced by the magic. Amusement parks such as Luna Park and Dreamland at Coney Island in New York drew millions of paying visitors to admire the architectural sculptures of light.

Edison’s 1879 incandescent lamp was a trifle inferior to the arc in light quality and efficiency but was immensely more practical. Symbolically, in 1882 the New York Stock Exchange installed three large “electro-liers,” new chandeliers with sixty-six electric lamps each, above the main trading floor. The exhibition of the power to break the night came first and dramatically. Penetration of the technology came later and, as usual, slowly. US cities, as shown earlier, achieved full illumination only about 1940.

The period from 1940 to 1995 can be called a period of consolidated light. Lamps became brighter and efficiency rose. To the human eye, the quality of the light may actually have worsened with the spread of fluorescents. With laser light, which has terrible visual quality now, we may approach theoretical efficiency, though actual lasers remain inefficient. Will that be the light at the end of the tunnel?

To return to basics, we illuminate in order to see in the dark. Illumination has no value if nobody looks. Arriving in a town at night, we always see the roads brightly lit and empty, so we know of waste. The marvels of the 1980s, electronic sensors and computer chips, can already scan rooms and streets and switch the lights off if no one is present. The watt-watch can help, but we can go further.

Sophisticated weapons systems (those mounted in helicopters, for example) feel the thumb of the pilot, observe his eyes, and shoot where he looks. A camera-computer in a room can watch the eyes of people present and illuminate only what they watch. Phased arrays, familiar in sonars and radars and developed now for infrared emitters, are certainly transportable into the visible range and can create sets of beams that are each directed to a chosen point or following a calculated track. The apparatus might now look baroque, but with miniaturization it could be concealed in a disk hanging from the ceiling of a room. Such a gadget appears to be the supreme fulfillment, illuminating an object only if a human gazes upon it.

But recall again that the objective is not to illuminate but to see. We illuminate because the eye has a lower limit of light sensitivity and, in any case, operating near such a limit is unpleasant. The military has developed complicated gadgets by which scanty photons from a poorly illuminated target are multiplied electronically to produce an image of sufficient luminosity. The principle is good; the machine is primitive. If photons flowing in an energized medium (such as an excited laser crystal) multiplied in a cascade along the way while keeping frequency and direction, we would have invented nightglasses, the mirror image of sunglasses.22 We could throw away all sorts of illuminating devices. A few milliwatts of power would be enough to brighten the night.

Efficient Warmth

The largest part of energy consumed in the home is used for temperature control. Space heating accounts for 60 percent or more of total residential energy use in many developed countries. Heating a home is a notably inelegant process from a thermodynamic point of view. We use pure free energy (electricity or fossil fuels) to compensate for a flow of energy from inside to outside having an efficiency according to the Second Law of Thermodynamics of about 3 percent if the difference in temperature is 10°C. Heat pumps solve the problem conceptually, but they see temperatures inside their heat exchangers and consequently overwork.23 Moreover, operating on electricity generated upstream, they already invite inefficiency into the endeavor.
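
The 3 percent figure follows from the expression given in the endnotes, (T1-T2)/T1 with temperatures in kelvin. The sketch below evaluates it for an assumed indoor temperature of 20°C and an outdoor temperature of 10°C; the specific temperatures and the function name are illustrative choices, since the text gives only the 10°C difference.

```python
def free_energy_fraction(inside_c, outside_c):
    """Second-Law value of heat leaking from inside (T1) to outside (T2).

    Implements (T1 - T2) / T1 with both temperatures converted to kelvin.
    """
    t1 = inside_c + 273.15
    t2 = outside_c + 273.15
    return (t1 - t2) / t1

# ASSUMPTION: 20 C indoors and 10 C outdoors, giving the 10 C difference in the text.
fraction = free_energy_fraction(20.0, 10.0)
print(f"equivalent free energy of the leaking heat: {fraction:.1%}")
```

The result is a little above 3 percent, which is the sense in which burning fuel or spending electricity to replace leaked heat squanders nearly all of the free energy supplied.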

Consider a radically different proposal. Windows are the big leaks, even when the glazing is sophisticated and expensive. Why not use window panes as thermoelectric devices, not to carry heat uphill but to stop heat from sledding downhill, that is, as heat-flux stopping devices?

Thermoelectric generators are usually seen as machines to make electricity by using the principle of the thermocouple. However, the device is reversible: by passing electricity through the machine, heat can be moved uphill. Several decades ago refrigerators were proposed using this principle on the basis of its great simplicity, although efficiencies are low. The old scheme for refrigerators could be revised in view of new thermoelectric materials and given suitably competitive objectives.

The basic idea is that electrodes on the inner and outer surfaces of the windowpanes can be made of conductive, transparent glasses. Glass made of zinc oxide might be sufficiently conductive. Voltages across the glass would be very low: volts or fractions of volts. Holding a temperature differential with zero flux would be more efficient energetically than putting heat (electrically!) into a house to balance the outgoing flux.

Electric Motion

So far we have looked at examples where efficiency wins, and net demand for power grows, only if the human population and its use of devices increase faster than efficiency. Now let us look at one example where a large new market might emerge, matching the ultra-high voltage lines and continental connections.

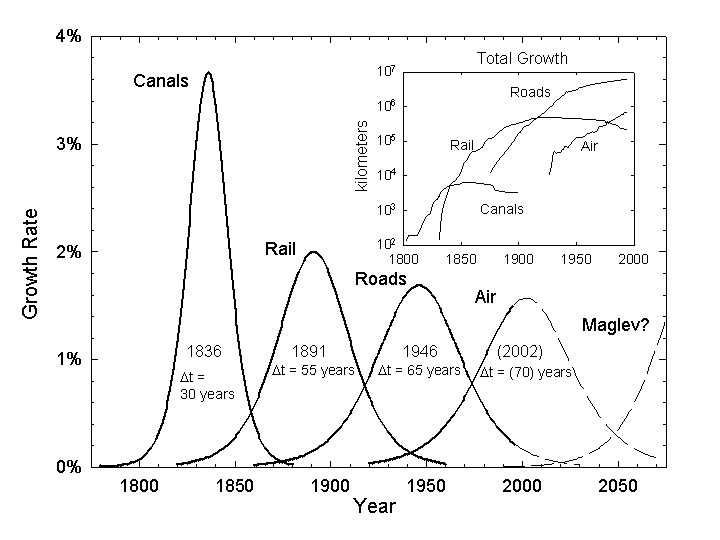

Toward the end of the last century electric motors for vehicle engines attracted much inventive action. Edison and Ferdinand Porsche produced sophisticated prototypes. The idea flopped on the roads but succeeded on the rails. Electric trams clamored through American and European cities, helped create suburbs, and in some cases connected cities. After 1940 most of the system was rapidly dismantled, largely because the trams could not match buses and cars in flexibility or speed. The mean velocity of transport keeps increasing through the progressive substitution of old technologies with new, faster ones. For France, the increase in the average speed of all machine transport has been about 3 percent per year during the last two centuries. Urban and suburban railways have a mean speed of only about 25 kilometers per hour, including stops. Cars have a mean speed on short distance trips of about 40 kilometers per hour. The latest in the series are airplanes, with a mean speed of 600 kilometers per hour. Airplanes will provide most of the increase in mean speed over the next fifty years.

Electric trains succeeded in Europe and Japan for the densely trafficked lines and still operate today. They have decent acceleration and speed compared with diesels. But most trains are not fast; the inclusive mean speed on intercity rail journeys is only about 60 kilometers per hour. The fastest trains, the French trains à grande vitesse (TGVs), are electric. The question for trains is how to compete with cars on one side and with airplanes on the other. Electricity probably cannot compete with hydrogen for propulsion of cars and other light vehicles.

The great market challenge for the current generation of fast trains, with top speeds of 400 kilometers per hour, is the short distances of less than 100 kilometers along which cars congest and airplanes cannot compete. Airports and airplanes in their present configuration are high-speed but low-flux machines. TGVs could prove extremely competitive in the intense shuffling of commuters and shoppers within these distances. A cursory review of Europe reveals about 5,000 kilometers of intercity links fitting the constraints of a 100-kilometer distance and high potential passenger flux.

Fast trains consume more or less the same amount of primary energy per seat-kilometer as a turboprop plane24 or a compact car. From the power point of view, a running TGV absorbs about 10 kilowatts per seat. The mean power demand of the proposed 5,000-kilometer system of TGV trains for commuters and shoppers would be around 6 gigawatts, with a peak of probably 10 gigawatts. If the concept is successful, this form of transport will be an important consumer of electricity, but it will take at least fifty years to become fully implemented.

To go to very high passenger fluxes over longer distances, one would need to go to aerial configurations of which even the most daring air-transport planners do not chance to dream: flocks of airplanes of five thousand passengers each taking off and landing together like migrating birds.

For intense connections linking large cities with peak fluxes around ten thousand passengers per hour, a solution is emerging that matches system requirements: the magnetically levitated (maglev) train operating in a partially evacuated tube or tunnel. In fact, Swiss engineers have developed the concept of a vacuum version of maglevs in part to reduce drastically the tunnel boring expenses, which in Switzerland would account for at least 90 percent of the cost in a conventional layout.25 To handle the shock wave from a high-speed train, a tunnel normally needs a cross section about ten times that of the train. In addition to narrowing greatly the tunneling requirement, the partial vacuum greatly reduces friction, making speed cheap and thus expanding the operational range of the train.

When operated at constant acceleration, for example 5 meters per second squared or 0.5 g (half the acceleration of gravity), about what one experiences in a Ferrari sports car, maglevs could link any pair of cities up to 2,000 kilometers apart in about twenty minutes. Consequently, daily commuting and shopping become feasible. Such daily trips account for 90 percent of all travel and are controlled by the total human time budget for travel of about one hour per day. With fast, short trips cities can coalesce in functional clusters of continental size. City pairs spaced less than 500 kilometers or ten minutes apart by maglevs, such as Bonn-Berlin, Milan-Rome, Tokyo-Osaka, and New York-Washington, would especially benefit.
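
The trip times quoted for constant-acceleration maglevs can be checked with elementary kinematics. The sketch below assumes the simplest profile, which the text does not spell out: the train accelerates at 5 meters per second squared to the midpoint and decelerates at the same rate to the destination. Function and variable names are illustrative.

```python
import math

def trip_profile(distance_km, accel_m_s2=5.0):
    """Return (trip minutes, peak km/h, mean km/h) for a speed-up/slow-down run.

    ASSUMPTION: constant acceleration over the first half of the distance and
    equal constant deceleration over the second half, with no cruising phase.
    """
    distance_m = distance_km * 1000.0
    half_time_s = math.sqrt(distance_m / accel_m_s2)  # from d/2 = a * t^2 / 2
    total_time_s = 2.0 * half_time_s
    peak_speed_kmh = accel_m_s2 * half_time_s * 3.6
    mean_speed_kmh = distance_km / (total_time_s / 3600.0)
    return total_time_s / 60.0, peak_speed_kmh, mean_speed_kmh

for distance in (500, 2000):
    minutes, peak, mean = trip_profile(distance)
    print(f"{distance} km: {minutes:.1f} min, peak {peak:.0f} km/h, mean {mean:.0f} km/h")
```

Under these assumptions a 500-kilometer trip takes between ten and eleven minutes at a mean speed somewhat under 3,000 kilometers per hour, and a 2,000-kilometer trip takes roughly twenty minutes, in line with the round figures in the text.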

Part of the energy consumption of vacuum maglevs overcomes residual friction; an economic balance must be struck between the friction losses and the pumping power to keep the vacuum. Part regenerates the electromagnetic system that pushes and pulls the trains.26 The power per passenger could roughly correspond to that of a large car, although these trains may travel at a mean speed of 3,000 kilometers per hour.
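
The per-passenger power can be reproduced from the figures given in the endnote on circulating energy: a 200-ton train, a pull of 0.5 g over 500 kilometers, a mean loss of 10 percent, one thousand seats, and a ten-minute trip. The sketch below simply repeats that arithmetic; taking 0.5 g as 5 meters per second squared follows the text, and the function name and parameter names are illustrative.

```python
def maglev_energy(train_mass_t=200.0, accel_m_s2=5.0, distance_km=500.0,
                  loss_fraction=0.10, seats=1000, trip_minutes=10.0):
    """Return (circulating kWh, lost kWh per seat, mean kW per seat).

    All default values are the round numbers quoted in the endnote;
    0.5 g is approximated as 5 m/s^2, as in the text.
    """
    force_n = train_mass_t * 1000.0 * accel_m_s2        # F = m * a
    energy_j = force_n * distance_km * 1000.0           # work = F * d
    energy_kwh = energy_j / 3.6e6                       # 1 kWh = 3.6 MJ
    loss_kwh_per_seat = energy_kwh * loss_fraction / seats
    mean_power_kw = loss_kwh_per_seat / (trip_minutes / 60.0)
    return energy_kwh, loss_kwh_per_seat, mean_power_kw

circulating, per_seat, power = maglev_energy()
print(f"circulating energy: {circulating:,.0f} kWh")
print(f"loss per seat: {per_seat:.1f} kWh, mean power per seat: {power:.0f} kW")
```

Without intermediate rounding the results come out slightly below the endnote's 140,000 kilowatt hours, 14 kilowatt hours per seat, and 84 kilowatts per passenger, which are the rounded values of the same calculation.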

The great advantage of the constant acceleration configuration for maglevs is that the energy required for each length of track is constant and could be stored, perhaps magnetically, in the track itself. Power demand is proportional to train speed and moves into the gigawatt range in the central section; however, with local storage (a few kilowatt hours per meter) the external electric power networks would see only the need to make up losses. Even assuming 90-percent efficiency, these would not be negligible. One hundred trains per hour would demand 1 gigawatt for the single line on which they operated.27 The Swiss system has a final potential of five hundred trains per hour, which would require 5 gigawatts, about one-third of current installed Swiss generating capacity.

The first long-distance maglev will probably run in about five to ten years. Berlin-Hamburg is under construction. The penetration of the technology will be gradual, as it always is for major infrastructural technologies. In fact, the next fifty years will probably be used largely to establish the feasibility, chart the maglev map, and prepare for the big push in the second half of the twenty-first century. In the long run, maglevs may establish several thousand kilometers of lines and become one of the most important users of electricity. A maglev trip per day becomes a few thousand kilowatt hours per year per person. If India and Eastern China join life in this superfast lane, the picture of a globally integrated, high-capacity electrical system begins to cohere.

Conclusions

The long economic cycles that seem to affect all parts of social and economic life constitute a good frame of reference for the development of the electrical system in terms of technology, territorial penetration, birth and death of enterprises, and intensity of use. Our examples suggest this is true for the United States and globally.

Two waves of electrification have passed through our societies. In the first, the United States attained system saturation in the 1930s at about 1,000 kilowatt hours annual consumption per residential customer, 200 terawatt hours of total annual use, 40 gigawatts of installed capacity, and 20 percent of primary fuels producing electricity. In the second wave, we have reached 10,000 kilowatt hours per residential customer, 3,000 terawatt hours of total use, 800 gigawatts of installed capacity, and about 40 percent of fuels producing electricity.

The fact that the patterns of temporal diffusion and growth are followed makes it possible to fit dynamic equations to the time series of facts and then compare them for consistency. This operation indicates that the 1990s are the season of saturation, which includes the experience of overcapacity or, alternately, underconsumption. Such phases are not uncommon for various branches of the industrial system, as managers tend to assume that growth characteristics of boom periods will extend into recessions, while consumers cut corners.

In the short term, total energy and electric energy consumption may continue to grow at a slower rate than overall economic activity. One interpretation is that during the expansion period of the long cycles the objective is growth, while during the recessive period the objective is to compete, shaving costs here and there and streamlining production. The savings include energy. Meeting goals pertaining to environmental quality and safety further tightens the system.

A new cycle formally beginning in 1995 started the game again, although the effects of the restart will not be particularly visible for a few years. Minima are flat. Looking at the cycles from a distance to grasp the general features, one sees the periods around their ends as revolutionary, that is, periods of reorganization: political, social, industrial, and institutional. We are evidently at this conjunction, and the electrical system will not escape it.

When the electrical system served the village, a complete vertical integration was inevitable. Regional coverage, the preferred scale of the past fifty years, also favored such integration. With the expansion to continental dimensions, a shift in responsibilities may make the system more efficient, agile, and manageable. The typical division is production, trunk-line transport, and retailing, with different organizations taking care of the pieces and the market joining them. The experiments in this sense now running in Great Britain, Australia, and other countries can be used as a test bed to develop the winning ideas.28

Apart from various economic advantages and organizational complications, the use of splicers on a large scale (untried to date) may bring an almost absolute resiliency, as every subset of the system may become self-sufficient, if temporarily. The electrical system should also become cleaner, as it intertwines more closely with natural gas and probably nuclear energy, thus furthering decarbonization. A sequence of technical barriers will appear, and thus the process of systematic research and innovation will continue to be needed; it will produce timely results.

In fact, our analyses suggest that rates of growth of technology tend to be self-consistent more than bound to population dynamics. Population, however, defines the size of the niche in the final instance. Thus a key question is, how long will it take to diffuse Western electric gadgetry to the 90 percent of the world that is not already imbued with it? The gadgetry keeps increasing. Followers keep following, if more closely. Based on historical experience, diffusion to distant corners requires fifty to one hundred years. Even within America or Europe, as we have seen, pervasive diffusion takes that long for major technologies. So most people may have to wait for most of the next century to experience nightglasses, splicers, and maglevs. These devices may be largely features of a fourth wave of electrification, while the spread of the profusion of information-handling devices dominates the third wave that is now beginning.

Considered over centuries and millennia, the electrical adventure is deeper than a quest for gadgets. In 1794 Volta demonstrated that the electric force observed by Luigi Galvani in twitching frog legs was not connected with living creatures, but could be obtained whenever two different metals are placed in a conducting fluid. Today we use electricity to dissolve the difference between inanimate and living objects and to control and inspire the inanimate with more delicacy than Dr. Frankenstein. Introducing electricity into production raised the rank of workers from sweating robots to robot controllers. The process can be generalized, with humanity, at leisure or at work, giving orders to its machines by voice or a wink of the eye.

This ancient aspiration for action at a distance and direct command over the inanimate will drive invention, innovation, and diffusion for hundreds of years more; we come full circle to the elektron of the ancient Hebrews and Greeks.

Acknowledgments

We thank Perrin Meyer for research assistance and figure preparation, as well as Arnulf Grübler, John Helm, Eduard Loeser, Nebojsa Nakicenovic, and Chauncey Starr.

Endnotes

1“Oh subtle fire, soul of the world, / beneficent electricity / You fill the air, the earth, the sea, / The sky and its immensity.” Quoted in Robert Darnton, Mesmerism and the End of the Enlightenment in France (Cambridge, Mass.: Harvard University Press, 1968), 29.

2Edgar Allan Poe, The Science Fiction of Edgar Allan Poe (New York: Penguin, 1976).

3For general histories of electrification, see Thomas P. Hughes, Networks of Power: Electrification in Western Society (Baltimore, Md.: Johns Hopkins University Press, 1983); Richard F. Hirsch, Technology and Transformation in the American Electric Utility Industry (Cambridge: Cambridge University Press, 1989); David Nye, Electrifying America (Cambridge, Mass.: MIT Press, 1990); Wolfgang Schivelbusch, Disenchanted Night: The Industrialization of Light in the Nineteenth Century (Berkeley, Calif.: University of California Press, 1988); and Sam H. Shurr, Calvin C. Burwell, Warren D. Devine, Jr., and Sidney Sonenblum, Electricity in the American Economy: Agent of Technological Progress (Westport, Conn.: Greenwood Press, 1990). For data and information on the early history of energy and electricity, see H. D. Schilling and R. Hildebrandt, Primarenergie-Elektrische Energie, Die Entwicklung des Verbrauchs an Primarenergietragern und an Elektrischer Energie in der Welt, in den USA und in Deutschland seit 1860 bzw. 1925 (Essen: Vertrag Gluckauf, 1977).

4Henry Adams, The Education of Henry Adams: An Autobiography (Boston, Mass.: Massachusetts Historical Society, 1918; reprinted Boston, Mass.: Houghton Mifflin, 1961).

5Such diffusive processes are well fit by the logistic equation, which represents simply and effectively the path of a population growing to a limit that is some function of the population itself. For discussion of applications of logistics, see Nebojsa Nakicenovic and Arnulf Grübler, eds., Diffusion of Technology and Social Behavior (Berlin: Springer, 1991). On the basic model, see S. Kingsland, “The Refractory Model: The Logistic Curve and the History of Population Ecology,” Quarterly Review of Biology 57 (1982): 29-52.

6A kilowatt (kW) is 1,000 watts; a megawatt (MW) is 1,000,000 W; a gigawatt (GW) is 1,000 MW; a terawatt (TW) is 1,000 GW. US generating capacity was 735 GW in 1990.

7Power is equal to V^2/R, where V is voltage and R is resistance.

8John Winthrop Hammond, Charles Proteus Steinmetz: A Biography (New York and London: The Century, 1924).

9Chauncey Starr, “A Personal History: Technology to Energy Strategy,” Annual Review of Energy and the Environment 29 (1995): 31-44.

10Cesare Marchetti, “Fifty-Year Pulsation in Human Affairs: Analysis of Some Physical Indicators,” Futures 17 (3) (1986): 376-388.

11For an analysis of electricity projections, see Charles R. Nelson, Stephen C. Peck, and Robert G. Uhler, “The NERC Fan in Retrospect and Prospect,” The Energy Journal 10 (2) (1989): 91-107.

12See Lee Schipper, “Life-Styles and the Environment: The Case of Energy,” Dædalus 125 (3) (Summer 1996).

13Jesse H. Ausubel and Arnulf Grübler, “Working Less and Living Longer: Long-Term Trends in Working Time and Time Budgets,” Technological Forecasting and Social Change 50 (3) (1995): 195-213.

14Peggy L. Jenkins, Thomas J. Phillips, Elliot J. Mulberg, and Steve P. Hui, “Activity Patterns of Californians: Use of and Proximity to Indoor Pollutant Sources,” Atmospheric Environment 26A (12) (1992): 2141-2148.

15Sulfur and other emissions from power plants also cause ills, but these have proven to be largely tractable. See Nebojsa Nakicenovic, “Freeing Energy from Carbon,” Dædalus 125 (3) (Summer 1996).

16Jesse H. Ausubel, “Energy and Environment: The Light Path,” Energy Systems and Policy 15 (3) (1991): 181-188.

17N. Nakicenovic, L. Bodda, A. Grübler, and P.-V. Gilli, Technological Progress, Structural Change and Efficient Energy Use: Trends Worldwide and in Austria, International Part (Laxenburg, Austria: International Institute for Applied Systems Analysis, 1990).

18Cesare Marchetti, “Society as a Learning System,” Technological Forecasting and Social Change 18 (1980): 267-282.

19While Carnot efficiency (now about 60 percent) limits heat cycles, fuel cells do not face such a limitation, as they are not based on heat cycles.

20Gaslight, with a mantle with rare-earth elements, was a superior source of bright light for a period.

21The plasma struck between the two carbon electrodes also emits.

22Sticking to monochromatic light, a ray proceeding in a resonantly excited medium stimulates emission and becomes amplified. Amplification is relatively small with present devices; hence the ray must travel up and down between mirrors. But no physical law limits amplification to such low levels. Semiconductor lasers, pumped by electric voltage, might hold the solution. In a second stage, they should also operate for a number of colors.

23The equivalent free energy of heat flowing out of a building is measured through the temperatures inside (T1) and outside (T2) in kelvin and is (T1-T2)/T1. In the case of a heat pump, due to temperature drops in the heat exchanger, it pumps heat from a temperature lower than T2 into a temperature higher than T1.

24For example, airplanes of the type ATR-42 or Dash.

25R. Nieth, W. Benoit, F. Descoeudres, M. Jufer, and F.-L. Perret, “Transport interregional à grande vitesse-Le Project SWISSMETRO,” Ecole Polytechnic Federale de Lausanne, 1991.

26We can calculate the amount of energy circulating in the system for a maglev with constant acceleration operating over a distance of 500 kilometers. A train of 200 metric tons accelerating at 0.5 g has a pull force (drag) of 1,000 kilonewtons, which over a stretch of 500 kilometers corresponds to 5 x 10^11 joules, or approximately 140,000 kilowatt hours. A mean loss of 10 percent would require 14,000 kWh for one thousand seats, or 14 kWh per seat over 500 km. This would correspond to 84 kW per passenger at a typical trip time of 10 minutes (e.g., Bonn to Berlin).

27For example, fifty trains might operate in each direction, spaced one minute apart. They can start from different stations or lanes. One per minute would be the frequency in the neck of the tunnel.

28For discussions of models of organizational change in the electricity industry, see Judith B. Sack, research memorandum series on “Global Electricity Strategy” (New York: Morgan Stanley), especially “The Darwinian Theory of Distribution” (12 December 1995), and “An Interrelated World” (29 February 1996).

Jesse H. Ausubel is Director of the Program for the Human Environment at The Rockefeller University.

Cesare Marchetti is an Institute Scholar at the International Institute for Applied Systems Analysis in Laxenburg, Austria.

Technology and environment: An overview

Environmental trends

The Environment for Future Business

Efficiency will win

In this article, I would like to share some surprising insights into the long-term evolution of the human environment and technology that may help diverse industries to do their jobs better. Indeed, absorbing some of these insights may determine which firms survive.

My points are: Demand for many primary products, or natural resources, will drop, in the USA and other important markets. In other words, efficiency will win. Pollution will plummet. Many firms’ emissions already have. We are going to live on a green planet with abundant land for nature.

As will become evident, these developments are not discontinuities or revolutions. Rather, the wheels of history are rolling in the direction of prudent, clean use of resources. Those who understand the dynamics can make money from them, too.

Usually we hear from environmental scientists and activists about deforestation, loss of arable land, water scarcity, and exhaustion of hydrocarbon fuels. The trumpets blare that, as population grows from six to 10 billion over coming decades, humans will demand so much of everything that prices will rocket, squabbles over access to resources will turn to wars, and a bath of pollution will burn us all.

In contrast, I believe that society is a learning system – and that we have been learning to become much more efficient. Pollution and waste usually indicate inefficiency. In an economy of competing companies, inefficiency is for losers. So, over the long run, successful companies are going to be green and clean.

A tour of the major natural resources – energy, land, water, and materials – justifies my confidence. Accordingly, this article surveys the trends in the use of these resources over the last century or two, globally and in the United States.

Along the way, it is important to keep in mind three paramount facts about the economy:

- Evolution is a series of replacements. Products, performers (usually companies), and technologies substitute for one another in the market in a search for inclusive fitness.

- The struggle is bloody. Products, performers, and technologies, indeed whole systems of doing things, lose and die.

- The struggle is episodic or cyclical, in many instances. In particular, long cycles or pulses of about 50 years punctuate the evolution of the economy. We happen to be at the start of a new cycle now.

Energy

Gains in energy productivity and efficiency astonish. Consider the gains for motors and lamps, pictured in Exhibit 1 on a logarithmic scale as the fraction of the limit of efficiency they might obtain. In about 1700, the quest began to build efficient engines, starting with steam. Three hundred years have increased the efficiency of the generators from one percent to about 50 percent of their apparent limit, the latter achieved by today’s best gas turbines, made by General Electric. Fuel cells, which will power our cars in 20 to 30 years, can advance apparent efficiency to about 70 percent.

The efficiency data for engines and lamps are plotted along a line fitted by a logistic equation. The scale used renders the conventional S-shaped curve of the logistic equation into a straight line. Source: Ausubel and Marchetti, Daedalus 125(3), 1996.

Lamps have brightened with each decade. At the outset of the 1990s, the Photonics Lab of the 3M Company announced an advance in short-wavelength solid-state light emitters in the blue and green spectral regions using zinc-selenium lasers. These could significantly advance efficiency, penetrating the market for displays and then reaching into other commercial applications.

Analyses of the evolving efficiency of the full energy system show that the United States has averaged about one percent less energy to produce a good or service each year since about 1800. However, our modern economies still probably run at only about five-percent efficiency for the full chain from extracting primary energy to delivery of the service to the final user. Fifty-percent efficiency at each of four links in a chain, after all, produces six-percent efficiency for the chain as a whole.
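The chain arithmetic is easy to check with a short sketch: the efficiency of the whole chain is the product of the efficiencies of its links. The four equal links of 50 percent come from the text; the 70-percent case is a hypothetical variation added only to show how strongly the product responds to each link.

```python
import math

def chain_efficiency(link_efficiencies):
    """Overall efficiency of a chain is the product of its link efficiencies."""
    return math.prod(link_efficiencies)

# Four links at 50 percent each, as in the text.
overall = chain_efficiency([0.5] * 4)
print(f"Four links at 50%: {overall:.4f}")  # 0.0625, i.e. about six percent

# Hypothetical variation: the same four links at 70 percent each.
improved = chain_efficiency([0.7] * 4)
print(f"Four links at 70%: {improved:.4f}")
```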

For the environment, efficiency with respect to use and leaks of carbon matters greatly. Carbon darkens the environmental outlook by threatening oily beaches, smoggy air, overheated climate, and black lungs. Happily, the most important single fact to emerge from 20 years of energy analyses is the gradual “decarbonization” of the energy system, the falling number of carbon atoms used to provide a unit of energy or economic product.

In 1860, globally, about 1.1 tons of carbon went into the primary energy produced by the energy equivalent of one ton of oil then in the fuel mix; the amount has decreased monotonically to about 0.7 tons in 1990. Exhibit 2 details the shrinking carbon used for final energy to the consumer in diverse countries in the last few decades. Efficiency is much higher in the richer countries, whose firms more readily discern inefficiency as a market opportunity and can parlay the expertise and capital to reduce it.

Source: Nakicenovic, Daedalus 125(3), 1996.

This decarbonization partly reflects that new motors and light bulbs get more out of the fuel they use. It also reflects the substitution of fuels that are progressively lighter in carbon. I noted above that evolution is a series of replacements. In fact, we can view the process of decarbonization as the replacement of carbon with hydrogen as the source of chemical energy (see Exhibit 3). Economizing on carbon, we are on a steady trajectory toward a methane, and eventually hydrogen, economy.

The ratio of H to C is plotted along a line fitted by a logistic equation. The scale used renders the conventional S-shaped curve of the logistic equation into a straight line.

Source: Ausubel, American Scientist, March-April 1996.

A grand substitution of leading energy sources has taken place over the past century and a half for the world: from wood and hay, to coal, to oil, and now to natural gas. “Oil” companies such as Shell and Mobil affirm it in the investments they now favor. The progression of fuels has sequentially supported a higher spatial density of consumption. Effectively, each new leading fuel is superior from an environmental point of view.

Wood and hay, prevalent at the start of the 19th century, were bulky and awkward. Consider if every high-rise resident needed to keep both a half-cord of wood at hand for heat and a loft of hay for the Honda. Think of the deforestation this would cause – directly for the fuelwood, and indirectly from the land needed for hay.

Coal had a long run at the top, notwithstanding its devastating effects on miners’ lungs and lives, urban air, and the land from which it came. Then, around 1900, the advantages of a system of fluids rather than solids became evident. Coal-powered autos never had much appeal. The weight and volume of the fuel were hard problems.

Oil has a higher energy density than coal, plus the advantage of a form that allows it to flow through pipelines and into tanks. Systems of tubes and tins can deliver carefully regulated quantities from the scale of the engine of a motor car to that of the Alaska Pipeline. But transfer between tins is imperfect, and the tubes and tins puncture. The spills make headlines.

In contrast, an inconspicuous, pervasive, and efficient system of pipes distributes natural gas. Its capillaries safely reach right to the kitchen. For gas, the next decades will be a time of relative and absolute growth. Gas is the penultimate fuel, the best until hydrogen, whose combustion product is water rather than carbon dioxide. Nuclear plants remain the best long-run candidate to manufacture the hydrogen, but perhaps solar will learn to compete.

Before making “neat” hydrogen, the next step is “zero emission power plants” with supercompact, superpowerful, superfast turbines that deliver what are now combustion products in a form appropriate for injection into aquifers where they can be sequestered forever. Very high pressure CO2 gas turbines in which combustion of the fuel with oxygen inside the gas flux provides the heat should do the trick.

Looking back, we see that growth of per capita energy consumption has been keyed to cleaner fuels (see Exhibit 4). Pulses of energy growth reach economic, social, technical, and environmental limits. In past pulses, per capita energy consumption tripled before the energy services desired outgrew the old fuels or portfolio of fuels. I postulate two new global pulses, one centered on gas and then a later one centered on hydrogen. Industrial, commercial, and residential end users have also enjoyed two neatly quantifiable pulses of penetration of electricity, and two more probably lie ahead, keyed to the information revolution and later to the electrification of travel.

Total world consumption is dissected into a succession of logistic curves, again plotted on a scale that renders each S-shaped pulse into a straight line.

Source: Ausubel et al., Climatic Change 12(3):245-263, 1988.

The growth pulses, lasting 40 to 45 years, are followed by lulls or depressions of a decade or two in energy consumption. These years between the pulses, when demand is rather flat, matter greatly for industry organization because they especially reward producers who are the most efficient and lowest cost – in short, the most competitive. They often witness a big restructuring of the industry, as is happening today to electric utilities.

Global triplings of demand need not mean triplings in the U.S. and other rich countries, where higher efficiencies throughout the chain can effectively supply end users who are already amply, if still sloppily, provided for.

To return to the environmental aspect, recall that the transport system mirrors the energy system. In personal transport, oil substituted for hay (that is, cars for horses). America had more than 20 million non-farm horses in 1910 and has about 200 million motor vehicles today. Imagine the pollution had the fleet stayed equine. So the energy story is efficiency and cleanliness to meet the demands of larger, denser markets, driven by competition, occurring in long cycles.

Land

More blood spills over land than over any other resource. Yields per hectare measure the productivity of land and the efficiency of land use. During the past half century, ratios of crops to land for the world’s major grains – corn, rice, soybeans, and wheat – have climbed, fast and globally.

A cluster of innovations, including tractors, seeds, chemicals, and irrigation – joined through timely information flows and better organized markets – raised yields to feed billions more without clearing new fields.

Per hectare, world grain yields rose 2.15 percent annually between 1960 and 1994. The productivity gains have stabilized global cropland since mid-century, mitigating pressure for deforestation in all nations and allowing forests to spread again in many. The Green Revolution that led to high-yield crops earned a Nobel Peace Prize. The alternative – extending farming onto hundreds of millions more hectares – would surely have evoked deadly strife.

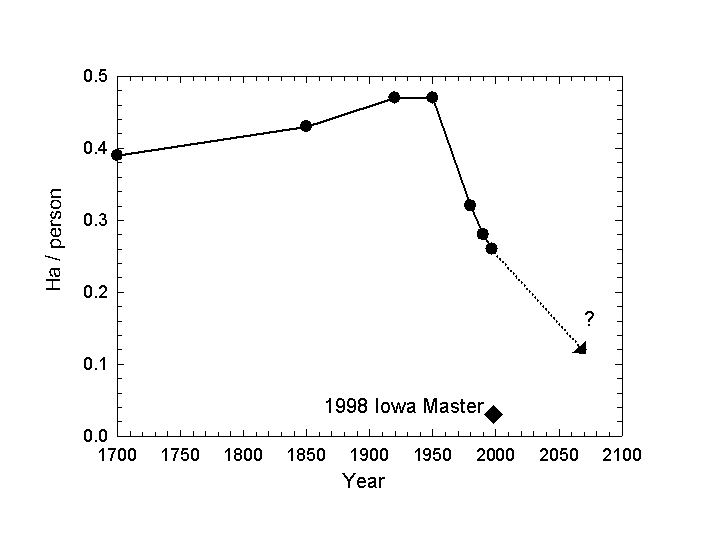

Fortunately, as Exhibit 5 shows, the agricultural production frontier remains spacious. On the same area, the average world farmer grows only about 20 percent of the corn of the top Iowa farmer, and the average Iowa farmer lags more than 30 years behind the state-of-the-art of his most productive neighbor.

Source: Waggoner, Daedalus 125(3), 1996.

Will high-yield agriculture tarnish the land? Farmers do many things on each area of land that they crop. In general, higher yields require little more clearing, tilling, and cultivating than lower yields. Protecting a plot of lush foliage from insects or disease requires only a little more pesticide than does sparse foliage. Keeping weeds from growing in deep shade beneath a bumper crop may require less herbicide per field than keeping them from growing in thin shade. The amount of water consumed is more or less the same per area whether the crop is abundant or sparse. Growing higher yields distills away only a little more water and leaves only a little more salt than lower yields.

Seed is planted per plot; choosing a higher yielding variety does not affect the surroundings. If the improved variety resists pests, it lessens the external effects of pesticides compared to a sprayed crop. By minimally changing the external effects of things that farmers do per area, lifting yields will thus lower effects per unit of yield.

On the other hand, farmers use more of some things to raise the yield of their crops. For example, farmers apply more fertilizer, especially nitrogen, per plot to raise yields. But in fact the key issue is usually the sound, complementary use of fertilizer and water. We appear to have reached the point of diminishing returns for applications of fertilizer. In America, use has been level for 15 years. Globally, use has fallen since 1985, in part because of big drops in the former Soviet bloc, where it was wastefully applied.

Globally, the future lies with precision agriculture. This approach to farming relies on technology and information to help the grower use precise amounts of inputs – fertilizer, pesticides, seed, water – exactly where they are needed. Precision agriculture includes grid soil sampling, field mapping, variable rate application, and yield monitoring, tied to global positioning systems. It helps the grower lower costs and improve yields in an environmentally responsible manner. At a soybean seminar in Dayton covered by the Associated Press on February 10, 1997, Ohio farmers reported using one-third less lime after putting fields on square-foot satellite grids detailing which areas would benefit from fertilizer.

We have had two revolutions in agriculture in this century. The first came from mechanization. The second came from agricultural chemicals. The next agricultural revolution will come from information.

If, during the next 60 to 70 years, the world farmer reaches the average yield of today’s U.S. corn grower, 10 billion people will need only half of today’s cropland while being able to consume the same number of calories as Americans eat today. This will happen if we maintain the yearly 2.15 percent worldwide yield growth of grains achieved during 1960-1994. Even if the rate falls by half, an area the size of India, globally, will revert from agriculture to woodland or other uses. The bottom line is that farm land should become more abundant in many countries. Land prices should show it.
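The force of compounding behind this projection can be seen in a rough sketch. It computes only how much yields multiply at a steady annual rate, and the corresponding land needed per unit of harvest. It does not reproduce the cropland estimate itself, which also depends on population and diet assumptions not modeled here; the function names are illustrative.

```python
def yield_multiplier(annual_rate, years):
    """Factor by which yield grows after compounding at annual_rate for years."""
    return (1.0 + annual_rate) ** years

def land_per_unit_harvest(annual_rate, years):
    """Land needed per unit of harvest, relative to today (1.0 = today's area)."""
    return 1.0 / yield_multiplier(annual_rate, years)

HISTORICAL_RATE = 0.0215  # yearly world grain yield growth, 1960-1994, from the text

for years in (60, 70):
    full = yield_multiplier(HISTORICAL_RATE, years)
    half = yield_multiplier(HISTORICAL_RATE / 2, years)
    print(f"{years} years: yield x{full:.2f} at 2.15%/yr, "
          f"x{half:.2f} at half that rate; "
          f"land per unit harvest {land_per_unit_harvest(HISTORICAL_RATE, years):.2f}")
```

Even at half the historical rate, yields roughly double over the period, which is the sense in which land can be spared while harvests rise.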

Water

Will water become scarce? Not if we similarly squeeze more value from each drop. Since 1975, per capita water use in the United States has fallen at an annual rate of 1.4 percent. Even absolute water withdrawals peaked about 1980.

Industry, alert to technology as well as costs, exemplifies the progress, although it consumes a small fraction of total water. Total U.S. industrial water withdrawals plateaued about 1970, and have since dropped by one-third (see Exhibit 6). Also interesting is that industrial withdrawals per unit of GNP have dropped steadily since 1940. Then, 14 gallons of water flowed into each dollar of output. Now the flow is less than three gallons per dollar.
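The drop from 14 gallons to under three gallons per dollar implies a steady average rate of decline, which a short sketch can estimate. The end year of 1997 is an assumption standing in for the text's "now"; the text gives only 1940 as the starting point.

```python
def implied_annual_rate(start_value, end_value, years):
    """Constant yearly rate of change that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

START_YEAR = 1940
END_YEAR = 1997        # assumption: "now" in the text, not stated explicitly
GALLONS_THEN = 14.0    # gallons of water per dollar of output in 1940, from the text
GALLONS_NOW = 3.0      # upper bound from the text ("less than three gallons")

rate = implied_annual_rate(GALLONS_THEN, GALLONS_NOW, END_YEAR - START_YEAR)
print(f"Implied change in water intensity: {rate * 100:.2f}% per year")
```

Because the text says the current flow is less than three gallons, the rate computed this way understates the decline somewhat.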

The steep decline taps many sectors, including chemicals, paper, petroleum refining, steel, and food processing, and also reflects changes in what forms the economy. After adjusting for production levels, not only intakes but discharges per unit of production are perhaps one-fifth of what they were 50 years ago in the United States.

Sources of Data: U.S. Historical Statistics, U.S. Statistical Abstract

Technology, law, and economics have all favored frugal water use. Better management of demand reduced water use in the Boston area from 320 million gallons per day in 1978 to 240 million gallons in 1992. Incidentally, efficient use of water and efficient use of energy usually go together, through better heat-exchangers and recirculation of cooling water. And, if land used for farming shrinks, water use will also tend to fall, although the fraction that is irrigated will rise.

Despite the gains, the United States is far from the most efficient practices. Water withdrawals for all users in the OECD countries range tenfold, with the United States and Canada the highest. Allowing for national differences in the major uses (irrigation, electrical cooling, industry, and public water supply), large opportunities for reductions remain. Like enterprises supplying energy or inputs to farmers, enterprises treating and supplying water will find the emphasis in their markets on quality, not quantity.

Materials

We can reliably project more energy from less carbon, more food from less land, and less thirst with less water. What about more goods and services with less material? Let us define such a “dematerialization” as the decline over time in the weight of materials used to perform a given economic function.

Dematerialization would matter enormously for the environment. Excluding water and oxygen, in 1990 on average each American mobilized more than 50 kg of materials per day (see Exhibit 7). Lower materials intensity of the economy could preserve landscapes and natural resources, lessen garbage, and reduce human exposures to hazardous materials.

Source: Wernick and Ausubel, Ann. Rev. of Energy and Environment, 1995.