
Lightening the Tread of Population on the Land: American Examples

Cities and Infrastructure: Synthesis and Perspectives

This article, as well as the full publication in which it originally appears, is also available on the National Academies Press website, located at https://www.nap.edu/books/0309037867/html/.

Community Risk Profiles: A Tool to Improve Environment and Community Health

Prepared for the Robert Wood Johnson Foundation

Editor: Iddo K. Wernick, Program for the Human Environment, The Rockefeller University

ISBN 0-9646419-0-9

For more information or to request reprints, please contact us at phe@mail.rockefeller.edu

Preface

This report presents the results of a one-year exploratory study, “Environment and Community Health: Historical Evolution and Emerging Needs,” sponsored by the Robert Wood Johnson Foundation. The Program for the Human Environment at The Rockefeller University conducted the study. The subject of the study was the analytical, informational, and service delivery framework for meeting needs in health care and environmental protection using the community as the focal point or, by medical analogy, the “community as the patient.”

To broaden the base of knowledge and professional contacts available to the project, we formed a Steering Group including members experienced in public health and environment, government, community organization, and information technologies and services (Appendix A). Prior to the first meeting of the Steering Group, we devised a framework to raise relevant issues, better define the most fruitful lines of inquiry, and map out the future course of the project. The Steering Group met on April 19, 1994 at The Rockefeller University. The members resolved to examine three basic questions and commission case studies to explore them in the context of specific communities in the United States.

The three questions were:

— What is the current status of deliberative processes for risk assessment at the level of the community?

— How can governments, independent and private sector groups, and researchers better use information technologies to access, integrate, and disseminate information about health and environment and related concerns at the local level?

— What policy levers can government use to ensure that communities better remediate local environmental hazards and improve the efficacy of local health care delivery?

To conclude the definitional phase of the project, Iddo Wernick, with the assistance of the Steering Group, drafted a discussion paper articulating the problems uncovered so far and providing the orientation for further work (pages 25-34). Planning for a larger Forum began, and two case studies were formally commissioned to be presented at the Forum. Theodore Glickman, a Senior Fellow at the Center for Risk Management of Resources for the Future (RFF), prepared a report on environmental equity in Allegheny County, Pennsylvania, using a Geographical Information System (GIS) as the framework. Lenny Siegel, Director of the Pacific Studies Center in Mountain View, California, reviewed the recent history of community efforts in addressing environmental problems in Silicon Valley, California, and described a process for developing community risk profiles based on his experience working as a community activist with federal, state, and local governments.

The two case studies, “Evaluating Environmental Equity in Allegheny County” (pages 35-62) and “Comparing Apples and Supercomputers: Evaluating Environmental Risk in Silicon Valley” (pages 63-79) formed the core of the agenda for the Forum on Environment and Community Health, held on September 20, 1994 at The Rockefeller University. The Forum included professionals from the public and private sectors with expertise in public health, environment, and community services (list of participants, Appendix A). Background reading, sent prior to the Forum, oriented participants to the purposes of the project (see Bibliography and Suggested Reading).

This report presents the main findings of the exploratory study. We stop short of costing out its main recommendation, an obvious next step.

Drafted initially by Iddo Wernick, this report synthesizes the informed contributions offered by the many people who have been a part of the study. Reflecting our backgrounds in environment, we tend to offer more detail on environment than health. The Forum participants have reviewed this report, and the Steering Group members have reviewed and approved it.

We thank Doris Manville for her assistance in organizing and administering the project. We also thank others whom we consulted during the project, including Mark Schaefer, Assistant Director for Environment, White House Office of Science and Technology Policy; Margaret Hamburg, M.D., Commissioner, New York City Department of Health; Debora Martin, U.S. Environmental Protection Agency; and Kenneth Jones and colleagues at the Northeast Center for Comparative Risk.

Jesse H. Ausubel

Director, Program for the Human Environment

Iddo K. Wernick

Research Associate, Program for the Human Environment


Death and the Human Environment: The United States in the 20th Century

AN INTRODUCTION TO DEADLY COMPETITION

Our subject is the history of death. Researchers have analyzed the time dynamics of numerous populations (nations, companies, products, technologies) competing to fill a niche or provide a given service. Here we review killers, that is, causes of death, as competitors for human bodies. We undertake the analysis to understand better the role of the environment in the evolution of patterns of mortality. Some of the story will prove familiar to public health experts. The story begins in the environment of water, soil, and air, but it leads elsewhere.

Our method is to apply two models developed in ecology to study growth and decline of interacting populations. These models, built around the logistic equation, offer a compact way of organizing numerous data and also enable prediction. The first model represents simple S-shaped growth or decline.[1] The second model represents multiple, overlapping and interacting processes growing or declining in S-shaped paths.[2] Marchetti first suggested the application of logistic models to causes of death in 1982.[3]

The first, simple logistic model assumes that a population grows exponentially until an upper limit inherent in the system is approached, at which point the growth rate slows and the population eventually saturates, producing a characteristic S-shaped curve. A classic example is the rapid climb and then plateau of the number of people infected in an epidemic. Conversely, a population such as the uninfected sleds downward in a similar logistic curve. Three variables characterize the logistic model: the duration of the process (Δt), defined as the time required for the population to grow from 10 percent to 90 percent of its extent; the midpoint of the growth process, which fixes it in time and marks the peak rate of change; and the saturation or limiting size of the population. For each of the causes of death that we examine, we analyze this S-shaped “market penetration” (or withdrawal) and quantify the variables.
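The three variables define the curve completely. A minimal Python sketch, assuming the parameterization commonly used with these models, in which the rate constant ln(81)/Δt makes Δt exactly the 10-to-90-percent duration; the function names are ours, for illustration only:

```python
import math

def logistic(t: float, kappa: float, dt: float, tm: float) -> float:
    """S-shaped growth toward the saturation level kappa.

    dt is the 10%-to-90% duration and tm the midpoint (peak rate of change).
    The rate constant ln(81)/dt is what makes dt the 10-to-90-percent span.
    """
    rate = math.log(81.0) / dt
    return kappa / (1.0 + math.exp(-rate * (t - tm)))

def logistic_decline(t: float, kappa: float, dt: float, tm: float) -> float:
    """Mirror-image S-shaped decline from kappa toward zero."""
    return kappa - logistic(t, kappa, dt, tm)

# Illustrative parameters: a process normalized to 1, lasting 40 years, centered on 1914.
for year in (1894, 1914, 1934):
    print(year, round(logistic(year, 1.0, 40.0, 1914.0), 3))  # values: 0.1, 0.5, 0.9
```

The 10 and 90 percent points fall at tm − Δt/2 and tm + Δt/2, which is why the duration and midpoint can be read directly off a fitted curve.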

Biostatisticians have long recognized competing risks, and so our second model represents multi-species competition. Here causes of death compete with and, if fitter in an inclusively Darwinian sense, substitute for one another. Each cause grows, saturates, and declines, and in the process reduces or creates space for other causes within the overall niche. The growth and decline phases follow the S-shaped paths of the logistic law.
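Multi-species competition can be sketched in a few lines. The version below is a deliberately simplified stand-in, not the authors' fitting procedure: each competitor's weight grows or shrinks exponentially, and shares are the weights normalized to sum to one. Under that assumption the logarithm of any two competitors' share ratio is linear in time, so every head-to-head contest traces a logistic path, and a competitor caught between an older and a newer one grows, saturates, and declines. The rates and reference years are hypothetical.

```python
import math

def substitution_shares(t, competitors):
    """Shares at time t for competitors given as (rate, reference_year) pairs.

    Competitor i gets weight exp(rate_i * (t - reference_year_i)); shares are
    the weights normalized to sum to one. For any pair, log(f_i / f_j) is then
    linear in t, so each head-to-head contest is a logistic substitution.
    """
    exponents = [rate * (t - t0) for rate, t0 in competitors]
    top = max(exponents)  # subtract the largest exponent to avoid overflow
    weights = [math.exp(x - top) for x in exponents]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical competitors (rate per year, reference year); not fitted values.
KILLERS = [
    (0.00, 1900.0),  # old killer: constant weight, so it steadily loses share
    (0.05, 1900.0),  # middle killer: draws level with the old one in 1900
    (0.10, 1930.0),  # newcomer: draws level with the middle one in 1960
]

for year in (1880, 1930, 2000):
    print(year, [round(s, 3) for s in substitution_shares(year, KILLERS)])
```

The middle competitor's share rises from 1880 to 1930 and falls by 2000, the grow-saturate-decline pattern described above.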

The domain of our analysis is the United States in the 20th century. We start systematically in the year 1900, because that is when reasonably reliable and complete U.S. time series on causes of death begin. The year 1900 is also a natural starting point because the relative importance of causes of death was then changing rapidly and systematically. In earlier periods causes of death may have been in rough equilibrium, fluctuating but not systematically changing; in such periods the logistic model would not apply. The National Center for Health Statistics and its predecessors collected the data analyzed here, which are also published in volumes issued by the U.S. Bureau of the Census.[4]

The data present several problems. One is that the categories of causes of death are old, and some are crude. The categories bear some uncertainty. Alternative categories and clusters, such as genetic illnesses, might be defined for which data could be assembled. Areas of incomplete data, such as neonatal mortality, and omissions, such as fetal deaths, could be addressed. To complicate the analysis, some categories have been changed by the U.S. government statisticians since 1900, incorporating, for example, better knowledge of forms of cancer.

Other problems are that causes of death may be unrecorded or recorded incorrectly. For a decreasing fraction of deaths, no “modern” cause is assigned. We assume that the unassigned or “other” deaths, which were numerous until about 1930, do not bias the analysis of the remainder; that is, they would be distributed roughly in proportion to the assigned causes. Similarly, we assume no systematic error in early records.
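The pro-rating assumption has a convenient consequence: if unassigned deaths are spread over the assigned causes in proportion to their size, each cause's share is unchanged, because every count is scaled by the same factor (1 + u/T), where u is the number of unassigned deaths and T the assigned total. Shares can therefore be computed from assigned deaths alone. A minimal Python check with hypothetical counts:

```python
def prorate(assigned, unassigned):
    """Spread unassigned deaths over assigned causes in proportion to their counts."""
    total = sum(assigned.values())
    return {cause: n + unassigned * n / total for cause, n in assigned.items()}

def shares(counts):
    """Each cause's fraction of the total."""
    total = sum(counts.values())
    return {cause: n / total for cause, n in counts.items()}

# Hypothetical counts, for illustration only.
assigned = {"tuberculosis": 200.0, "typhoid": 30.0, "cardiovascular": 350.0}
unassigned = 120.0

before = shares(assigned)
after = shares(prorate(assigned, unassigned))
for cause in assigned:
    print(cause, round(before[cause], 4), round(after[cause], 4))
```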

Furthermore, causes are sometimes multiple, though the death certificate requires that ultimately one basic cause be listed.[5] This rule may hide environmental causes. For example, infectious and parasitic diseases thrive in populations suffering drought and malnutrition. The selection rule dictates that only the infectious or parasitic disease be listed as the basic cause. For some communities or populations the bias could be significant, though not, we believe, for our macroscopic look at the 20th century United States.

The analysis treats all Americans as one population. Additional analyses could be carried out for subpopulations of various kinds and by age group.[6] Comparable analyses could be prepared for populations elsewhere in the world at various levels of economic development.[7]

With these cautions, history still emerges.

As a reference point, first observe the top 15 causes of death in America in 1900 (Table 1). These accounted for about 70 percent of the registered deaths. The remainder would include both a sprinkling of many other causes and some deaths that should have been assigned to the leading causes. Although heart disease was already the largest single cause of death in 1900, infectious diseases dominated the standings.

Death took 1.3 million in the United States in 1900. In 1997 about 2.3 million succumbed. While the population of Americans more than tripled, deaths in America increased only 1.7 times because the death rate halved (Figure 1). As we shall see, early in the century the hunter microbes had better success.
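The three figures in this paragraph are tied together by the identity deaths = population × crude death rate. Using the text's own rounded values (deaths up 1.7 times, death rate halved), a quick consistency check on the population growth:

```latex
D = P \cdot r
\quad\Longrightarrow\quad
\frac{P_{1997}}{P_{1900}}
= \frac{D_{1997}/D_{1900}}{r_{1997}/r_{1900}}
\approx \frac{1.7}{0.5} = 3.4
```

A ratio of about 3.4 agrees with the statement that the population more than tripled.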

Table 1. U.S. death rate per 100,000 population for leading causes, 1900. For source of data, see Note 4.

| Rank | Cause | Rate per 100,000 | Mode of Transmission |
|---|---|---|---|
| 1. | Major Cardiovascular Disease | 345 | [N.A.] |
| 2. | Influenza, Pneumonia | 202 | Inhalation, Intimate Contact |
| 3. | Tuberculosis | 194 | Inhalation, Intimate Contact |
| 4. | Gastritis, Colitis, Enteritis, and Duodenitis | 142 | Contaminated Water and Food |
| 5. | All Accidents | 72 | [Behavioral] |
| 6. | Malignant Neoplasms | 64 | [N.A.] |
| 7. | Diphtheria | 40 | Inhalation |
| 8. | Typhoid and Paratyphoid Fever | 31 | Contaminated Water |
| 9. | Measles | 13 | Inhalation, Intimate Contact |
| 10. | Cirrhosis | 12 | [Behavioral] |
| 11. | Whooping Cough | 12 | Inhalation, Intimate Contact |
| 12. | Syphilis and Its Sequelae | 12 | Sexual Contact |
| 13. | Diabetes Mellitus | 11 | [N.A.] |
| 14. | Suicide | 10 | [Behavioral] |
| 15. | Scarlet Fever and Streptococcal Sore Throat | 9 | Inhalation, Intimate Contact |
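Table 1 bears out the claim in the text that, although cardiovascular disease leads as a single cause, infectious diseases dominate the standings. A short Python tally, assuming (our grouping, not the authors') that a row counts as infectious whenever the table lists a route of transmission. Note that the totals cover only the top 15 causes:

```python
# Table 1 rows: (cause, 1900 death rate per 100,000, mode of transmission).
TABLE_1 = [
    ("Major Cardiovascular Disease", 345, "[N.A.]"),
    ("Influenza, Pneumonia", 202, "Inhalation, Intimate Contact"),
    ("Tuberculosis", 194, "Inhalation, Intimate Contact"),
    ("Gastritis, Colitis, Enteritis, and Duodenitis", 142, "Contaminated Water and Food"),
    ("All Accidents", 72, "[Behavioral]"),
    ("Malignant Neoplasms", 64, "[N.A.]"),
    ("Diphtheria", 40, "Inhalation"),
    ("Typhoid and Paratyphoid Fever", 31, "Contaminated Water"),
    ("Measles", 13, "Inhalation, Intimate Contact"),
    ("Cirrhosis", 12, "[Behavioral]"),
    ("Whooping Cough", 12, "Inhalation, Intimate Contact"),
    ("Syphilis and Its Sequelae", 12, "Sexual Contact"),
    ("Diabetes Mellitus", 11, "[N.A.]"),
    ("Suicide", 10, "[Behavioral]"),
    ("Scarlet Fever and Streptococcal Sore Throat", 9, "Inhalation, Intimate Contact"),
]

# Assumption: a row is infectious if the table lists a route of transmission.
NOT_TRANSMITTED = {"[N.A.]", "[Behavioral]"}

infectious = sum(rate for _, rate, mode in TABLE_1 if mode not in NOT_TRANSMITTED)
other = sum(rate for _, rate, mode in TABLE_1 if mode in NOT_TRANSMITTED)
print(infectious, other, infectious + other)  # 655 514 1169
```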

DOSSIERS OF EIGHT KILLERS

Let us now review the histories of eight causes of death: typhoid, diphtheria, the gastrointestinal family, tuberculosis, pneumonia plus influenza, cardiovascular, cancer, and AIDS.

For each of these, we will see first how it competes against the sum of all other causes of death. In each figure we show the raw data, that is, the fraction of total deaths attributable to the killer, with a logistic curve fitted to the data. In an inset, we show the identical data in a transform that renders the S-shaped logistic curve linear.[8] It also normalizes the process of growth or decline to one (or to 100 percent). Thus, in the linear transform the fraction of deaths each cause garners, which is plotted on a semi-logarithmic scale, becomes the percent of its own peak level (taken as one hundred percent). The linear transform eases the comparison among cases and the identification of the duration and midpoint of the processes, but also compresses fluctuations.
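We assume the linearizing transform is the Fisher-Pry (logit) transform, log[F/(1 − F)], where F is the cause's share divided by its saturation level. A logistic path in F then plots as a straight line: its zero crossing marks the midpoint, and its slope gives the duration, since going from F = 0.1 to F = 0.9 spans log10(81) on the transformed axis. A minimal Python sketch under that assumption, with hypothetical function names and synthetic data rather than the census series:

```python
import math

def fisher_pry(f):
    """Linearizing transform log10(F / (1 - F)); requires 0 < F < 1."""
    return math.log10(f / (1.0 - f))

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept of ys on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def duration_and_midpoint(years, fractions):
    """Estimate (dt, tm) from normalized fractions F of a growth or decline process."""
    slope, intercept = fit_line(years, [fisher_pry(f) for f in fractions])
    tm = -intercept / slope              # the line crosses zero where F = 0.5
    dt = math.log10(81.0) / abs(slope)   # F = 0.1 to F = 0.9 spans log10(81)
    return dt, tm

# Synthetic decline (not census data) with dt = 39 years and tm = 1914.
rate = math.log(81.0) / 39.0
years = list(range(1900, 1951, 5))
remaining = [1.0 / (1.0 + math.exp(rate * (y - 1914))) for y in years]
print(duration_and_midpoint(years, remaining))  # approximately (39.0, 1914.0)
```

With real data the points scatter about the line, and the fit recovers Δt and the midpoint only approximately; the transform also compresses fluctuations, as the text notes.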

Typhoid (Figure 2) is a systemic bacterial infection caused primarily by Salmonella typhi.[9] Mary Mallon, the cook (and asymptomatic carrier) popularly known as Typhoid Mary, was a major factor in empowering the New York City Department of Health at the turn of the century. Typhoid was still a significant killer in 1900, though spotty records show it peaked in the 1870s. In the 1890s, Walter Reed, William T. Sedgwick, and others determined the etiology of typhoid fever and confirmed its relation to sewage-polluted water. It took about 40 years to protect against typhoid, with 1914 the year of inflection or peak rate of decline.

Diphtheria (Figure 2) is an acute infectious disease caused by the toxin of the bacterium Corynebacterium diphtheriae. In Massachusetts, where the records extend back further than for the United States as a whole, diphtheria flared to 196 per 100,000 in 1876, or about 10 percent of all deaths. Like typhoid, diphtheria took 40 years to defeat, centered in 1911. By the time the diphtheria vaccine was introduced in the early 1930s, 90 percent of the decline in its murderous career was complete.
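The 90 percent figure follows directly from the diphtheria parameters, assuming the standard logistic form in which Δt spans 10 to 90 percent of the process and F is the fraction of the process completed:

```latex
F(t) = \frac{1}{1 + e^{-\frac{\ln 81}{\Delta t}(t - t_m)}},
\qquad
F(t_{90}) = 0.9
\;\Longrightarrow\;
e^{-\frac{\ln 81}{\Delta t}(t_{90} - t_m)} = \tfrac{1}{9}
\;\Longrightarrow\;
t_{90} = t_m + \frac{\Delta t}{2} \approx 1911 + \frac{40}{2} = 1931
```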

Next comes the category of diseases of the gut (Figure 2). Deaths here are mostly attributed to acute dehydrating diarrhea, especially in children, but also to other bacterial infections such as botulism and various kinds of food poisoning. The most notorious culprit was the bacterium Vibrio cholerae. In 1833, while essayist Ralph Waldo Emerson was working on his book Nature, expounding the basic benevolence of the universe, a cholera pandemic killed 5 to 15 percent of the population in many American localities where the normal annual death rate from all causes was 2 or 3 percent.

In 1854 in London a physician and health investigator, John Snow, seized the idea of plotting the locations of cholera deaths on a map of the city. Most deaths occurred in St. James Parish, clustered about the Broad Street water pump. Snow discovered that cholera victims who lived outside the Parish also drew water from the pump. Although consumption of the infected water had already peaked, Snow’s famous removal of the pump handle properly fixed in the public mind the means of cholera transmission.[10] In the United States, the collapse of cholera and its relations took about 60 years, centered on 1913. As with typhoid and diphtheria, sanitary engineering and public health measures addressed most of the problem before modern medicine intervened with antibiotics in the 1940s.

In the late 1960s, deaths from gastrointestinal disease again fell sharply. The fall may indicate the widespread adoption of intravenous and oral rehydration therapies and perhaps new antibiotics. It may also reflect a change in record-keeping.

Tuberculosis (Figure 2) refers largely to the infectious disease of the lungs caused by Mycobacterium tuberculosis. In the 1860s and 1870s in Massachusetts, TB peaked at 375 deaths per 100,000, or about 15 percent of all deaths. Henry David Thoreau, author of Walden; or, Life in the Woods, died of bronchitis and tuberculosis at the age of 44 in 1862. TB took about 53 years to jail, centered in 1931. Again, the pharmacopoeia entered the battle rather late: multi-drug therapies became effective only in the 1950s.

Pneumonia and influenza are combined in Figure 3. They may constitute the least satisfactory category, mixing viral and bacterial aggressors. Figure 3 includes Influenza A, the frequently mutating RNA virus believed to have caused the Great Pandemic of 1918-1919 at the close of World War I, when flu claimed about a third of all deaths in the United States. Pneumonia and influenza were on the loose until the 1930s. Then, in 17 years centered on 1940, the lethality of pneumonia and influenza tumbled to a plateau where “flu” has remained irrepressibly for a half century.

Now we shift from pathogens to a couple of other major killers. Major cardiovascular diseases, including heart disease, hypertension, cerebrovascular diseases, atherosclerosis, and associated renal diseases, display their triumphal climb and incipient decline in Figure 3. In 1960, about 55 percent of all fatal attacks were against the heart and its allies, culminating a 60-year climb. Having lost 14 points of market share in the past 40 years, cardiovascular disease looks vulnerable: the paths of other killers have descended quickly once they bent downward. We predict an 80-year drop to about 20 percent of American dead. Cardiovascular disease is ripe for treatment through behavioral change and medicine.
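The Figure 3b caption describes the cardiovascular curve as the sum of an upward and a downward logistic, in the spirit of the bi-logistic decomposition cited in Note 1. A minimal Python sketch of that structure: only the durations (60 and 80 years) and midpoints (1939 and 1983) come from the caption, while the base level and both amplitudes are hypothetical, chosen so the long-run level settles near the predicted 20 percent. The output illustrates the shape, not the fitted series:

```python
import math

def logistic(t, kappa, dt, tm):
    """Logistic of height kappa that goes from 10% to 90% of kappa over dt years."""
    return kappa / (1.0 + math.exp(-math.log(81.0) / dt * (t - tm)))

def bi_logistic_share(t, base, rise, fall):
    """Base level plus a rising logistic minus a falling one; rise/fall are (kappa, dt, tm)."""
    return base + logistic(t, *rise) - logistic(t, *fall)

# Durations and midpoints from the Figure 3b caption; the base level and the
# two amplitudes are hypothetical, chosen only so the curve settles near 0.20.
RISE = (0.40, 60.0, 1939.0)
FALL = (0.40, 80.0, 1983.0)
BASE = 0.20

for year in (1900, 1960, 2000, 2100):
    print(year, round(bi_logistic_share(year, BASE, RISE, FALL), 3))
```

The long-run level is simply the base plus the rising amplitude minus the falling amplitude, so any fitted decomposition pins down the eventual floor.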

A century of unremitting gains for malignant neoplasms appears neatly in Figure 3. According to Ames et al., the culprits are ultimately the DNA-damaging oxidants.[11] One might urge caution in lumping together lung, stomach, breast, prostate, and other cancers. Lung and the other cancers associated with smoking account for much of the rising slope. However, cancers whose incidence has remained constant also win share as other causes of death diminish. In the 1990s the death rate from malignancies flattened, but those few years do not yet suffice to establish a trend. According to the model, cancer’s rise should last 160 years and at peak account for 40 percent of American deaths.

The spoils of AIDS, a meteoric viral entrant, are charted in Figure 3. The span of data for AIDS is short, and the data plotted here may not be reliable. Pneumonia and other causes of death may mask AIDS’ toll. Still, this analysis suggests AIDS reached its peak market share of about 2 percent of deaths in 1995. Uniquely, the AIDS trajectory suggests that medicine sharply blocked a deadly career, stopping it about 60 percent of the way toward its projected fulfillment.

Now look at the eight causes of death as if it were open hunting season for all (Figure 4). Shares of the hunt changed dramatically, and fewer hunters can still shoot to kill with regularity. We can speculate why.

BY WATER, BY AIR

First, consider what we label the aquatic kills: a combination of typhoid and the gastrointestinal family. They cohere visually and phase down by a factor of ten over 33 years centered on 1919 (Figure 5).

Until well into the 19th century, town dwellers drew their water from local ponds, streams, cisterns, and wells.[12] They disposed of the wastewater from cleaning, cooking, and washing by throwing it on the ground, into a gutter, or into a cesspool lined with broken stones. Human wastes went to privy vaults, shallow holes lined with brick or stone, close to home, sometimes in the cellar. In 1829 residents of New York City deposited about 100 tons of excrement each day in the city soil. Scavengers collected the “night soil” in carts and dumped it nearby, often in streams and rivers.

Between 1850 and 1900 the share of the American population living in towns grew from about 15 to about 40 percent. The number of cities over 50,000 grew from 10 to more than 50. Increasing urban density made waste collection systems less adequate. Overflowing privies and cesspools filled alleys and yards with stagnant water and fecal wastes. The growing availability of piped-in water created further stress. More water was needed for fighting fires, for new industries that required pure and constant water supply, and for flushing streets. To the extent they existed, underground sewers were designed more for storm water than wastes. One could not design a more supportive environment for typhoid, cholera, and other water-borne killers.

By 1900 towns were building systems to treat their water and sewage. Financing and constructing the needed infrastructure took several decades. By 1940 the combination of water filtration, chlorination, and sewage treatment stopped most of the aquatic killers.

Refrigeration in homes, shops, trucks, and railroad boxcars took care of much of the rest. The chlorofluorocarbons (CFCs) condemned today for thinning the ozone layer were introduced in the early 1930s as a safer and more effective substitute for ammonia in refrigerators. The ammonia devices tended to explode. If thousands of Americans still died of gastrointestinal diseases or were blown away by ammonia, we might hesitate to ban CFCs.

Let us move now from the water to the air (Figure 6). “Aerial” groups all deaths from influenza and pneumonia, TB, diphtheria, measles, whooping cough, and scarlet fever and other streptococcal diseases. Broadly speaking these travel by air. To a considerable extent they are diseases of crowding and unfavorable living and working conditions.

Collectively, the aerial diseases were about three times as deadly to Americans as their aquatic brethren in 1900. Their breakdown began more than a decade later and required almost 40 years.
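The “about three times” figure can be checked roughly against Table 1, grouping its rows as the text does: the aerial members listed above, and the aquatic pair of typhoid plus the gastrointestinal family. Table 1 covers only the top 15 causes of 1900, so this is a rough check on rates, not a reconstruction of Figures 5 and 6:

```python
# 1900 death rates per 100,000 from Table 1, grouped as in the text.
AERIAL = {
    "Influenza, Pneumonia": 202,
    "Tuberculosis": 194,
    "Diphtheria": 40,
    "Measles": 13,
    "Whooping Cough": 12,
    "Scarlet Fever and Streptococcal Sore Throat": 9,
}
AQUATIC = {
    "Gastritis, Colitis, Enteritis, and Duodenitis": 142,
    "Typhoid and Paratyphoid Fever": 31,
}

aerial_total = sum(AERIAL.values())
aquatic_total = sum(AQUATIC.values())
print(aerial_total, aquatic_total, round(aerial_total / aquatic_total, 2))  # 470 173 2.72
```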

The decline could be decomposed into several sources. Certainly large credit goes to improvements in the built environment: replacement of tenements and sweatshops with more spacious and better ventilated homes and workplaces. Huddled masses breathed free. Much credit goes to electricity and cleaner energy systems at the level of the end user.

Reduced exposure to infection may be an unrecognized benefit of shifting from mass transit to personal vehicles. Credit obviously is also due to nutrition, public health measures, and medical treatments.

The aerial killers have kept their market share stable since the mid-1950s. Their persistence is associated with poverty; with crowded environments such as schoolrooms and prisons; and with the intractability of viral diseases. Mass defense is more difficult: even the poorest Bostonians or Angelenos receive safe drinking water, but for the air there is no equivalent to chlorination.

Many aerial attacks occurred in winter, when indoor crowding is greatest. Many aquatic kills were during summer, when the organic fermenters were speediest. Diarrhea was called the summer complaint. In Chicago between 1867 and 1925 a phase shift occurred in the peak incidence of mortality from the summer to the winter months.[13] In America and other temperate zone industrialized countries, the annual mortality curve has flattened during this century as the human environment has come under control. In these countries, most of the faces of death are no longer seasonal.

BY WAR, BY CHANCE?

Let us address briefly the question of where war and accidents fit. In our context we care about war because disputed control of natural resources such as oil and water can cause war. Furthermore, war leaves a legacy of degraded environment and poverty where pathogens find prey. We saw the extraordinary spike of the flu pandemic of 1918-1919.

War functions as a short-lived and sometimes intense epidemic. In this century, the most intense war in the developed countries may have been in France between 1914 and 1918, when about one-quarter of all deaths were associated with arms.[14] The peak of 20th-century war deaths in the United States occurred between 1941 and 1945, when about 7 percent of all deaths were in military service, slightly exceeding pneumonia and influenza in those years.

Accidents, which include traffic, falls, drowning, and fire, follow a dual logic. Observe the shares of auto and all other accidents in the total kills in the United States during this century (Figure 7). Like most diseases, fatal non-auto accidents have dropped, in this case roughly linearly from about 6 percent to about 2 percent of all fatalities. Smiths and miners faced more dangers than office workers. The fall also reflects lessening loss of life from environmental hazards such as floods, storms, and heat waves.

Auto accidents do not appear accidental at all but under perfect social control. On the roads, we appear to tolerate a certain range of risk and regulate accordingly, an example of so-called risk homeostasis.[15] The share of killing by auto has fluctuated around 2 percent since about 1930, carefully maintained by numerous changes in vehicles, traffic management, driving habits, driver education, and penalties.

DEADLY ORDER

Let us return to the main story. Infectious diseases scourged the 19th century. In Massachusetts in 1872, one of the worst plague years, five infectious diseases (tuberculosis, diphtheria, typhoid, measles, and smallpox) alone accounted for 27 percent of all deaths. Infectious diseases thrived in the environment of the industrial revolution’s new towns and cities, which grew without modern sanitation.

Infectious diseases, of course, are not peculiarly diseases of industrialization. In England during the intermittent plagues between 1348 and 1374, half or more of all mortality may have been attributable to the Black Death.[16] Smallpox, arriving with the Spanish conquest, depopulated Central Mexico.[17] Gonorrhea depopulated the Pacific island of Yap.[18]

At the time of its founding in 1901, our institution, the Rockefeller Institute for Medical Research as it was then called, appropriately focused on the infectious diseases. Prosperity, improvements in environmental quality, and science diminished the fatal power of the infectious diseases by an order of magnitude in the United States in the first three to four decades of this century. Modern medicine has kept the lid on.[19]

If infections were the killers of reckless 19th century urbanization, cardiovascular diseases were the killers of 20th century modernization. While avoiding the subway in your auto may have reduced the chance of influenza, it increased the risk of heart disease. Traditionally populations fatten when they change to a “modern” lifestyle. When Samoans migrate to Hawaii and San Francisco or live a relatively affluent life in American Samoa, they gain between 10 and 30 kg.[20]

The environment of cardiovascular death is not the Broad Street pump but offices, restaurants, and cars. So, heart disease and stroke appropriately roared to the lead in the 1920s.

Since the 1950s, however, cardiovascular disease has steadily lost ground to a more indefatigable terminator, cancer. In our calculation, cancer passed infection for the #2 spot in 1945. Americans appear to have felt the change: in that year Alfred P. Sloan and Charles Kettering channeled some of the fortune they had amassed in building the General Motors Corporation to found the Sloan-Kettering Institute for Cancer Research.

Though cancer trailed cardiovascular disease in 1997, 23 percent to 41 percent, cancer should take over as the nation’s #1 killer by 2015 if long-run dynamics continue as usual (Figure 8). The main reasons are not environmental. Doll and Peto estimate that only about 5 percent of U.S. cancer deaths are attributable to environmental pollution and geophysical factors such as background radiation and sunlight.[21]

The major proximate causes of current forms of cancer, particularly tobacco smoke and dietary imbalances, can be reduced. But if Ames and others are right that cancer is a degenerative disease of aging, no miracle drugs should be expected, and one form of cancer will succeed another, assuring it a long stay at the top of the most wanted list. In the competition among the three major families of death, cardiovascular will have held first place for almost 100 years, from 1920 to 2015.

Will a new competitor enter the hunt? As various voices have warned, the most likely suspect is an old one, infectious disease.[22] Growth of antibiotic resistance may signal re-emergence. Also, humanity may be creating new environments, for example, in hospitals, where infection will again flourish. Massive population fluxes over great distances test immune systems with new exposures. Human immune systems may themselves weaken, as children grow in sterile apartments rather than barnyards.[23] Probably most important, a very large number of elderly offer weak defense against infections, as age-adjusted studies could confirm and quantify. So, we tentatively but logically and consistently project a second wave of infectious disease. In Figure 9 we aggregate all major infectious killers, both bacterial and viral. The category thus includes not only the aquatics and aerials discussed earlier, but also septicemia, syphilis, and AIDS.[24] A grand and orderly succession emerges.

SUMMARY

Historical examination of causes of death shows that lethality may evolve in consistent and predictable ways as the human environment comes under control. In the United States during the 20th century, infections became less deadly, while heart disease grew dominant, followed by cancer. Logistic models of growth and multi-species competition, in which the causes of death are the competitors, describe precisely the evolutionary success of the killers, as seen in the dossiers of typhoid, diphtheria, the gastrointestinal family, pneumonia/influenza, cardiovascular disease, and cancer. Improvements in water supply and other aspects of the environment provided the cardinal defenses against infection. Environmental strategies appear less powerful for deferring the likely future causes of death. If the orderly history of death continues, cancer will overtake heart disease as the leading U.S. killer around the year 2015, and infections will gradually regain their fatal edge.

FIGURES

Figure 1. Crude Death Rate: U.S. 1900-1997. Sources of data: Note 4.

Figure 2a. Typhoid and Paratyphoid Fever as a Fraction of All Deaths: U.S. 1900-1952. The larger panel shows the raw data and a logistic curve fitted to the data. The inset panel shows the same data in a transform that renders the S-shaped curve linear and normalizes the process to 1. “F” refers to the fraction of the process completed. Here the time it takes the process to go from 10 percent to 90 percent of its extent is 39 years, and the midpoint is the year 1914. Source of data: Note 4.

Figure 2b. Diphtheria as a Fraction of All Deaths: U.S. 1900-1956. Source of data: Note 4.

Figure 2c. Gastritis, Duodenitis, Enteritis, and Colitis as a Fraction of All Deaths: U.S. 1900-1970. Source of data: Note 4.

Figure 2d. Tuberculosis, All Forms, as a Fraction of All Deaths: U.S. 1900-1997. Sources of data: Note 4.

Figure 3a. Pneumonia and Influenza as a Fraction of All Deaths: U.S. 1900-1997. Note the extraordinary pandemic of 1918-1919. Sources of data: Note 4.

Figure 3b. Major Cardiovascular Diseases as a Fraction of All Deaths: U.S. 1900-1997. In the inset, the curve is decomposed into upward and downward logistics which sum to the actual data values. The midpoint of the 60-year rise of cardiovascular disease was the year 1939, while the year 1983 marked the midpoint of its 80-year decline. Sources of data: Note 4.

Figure 3c. Malignant Neoplasms as a Fraction of All Deaths: U.S. 1900-1997. Sources of data: Note 4.

Figure 3d. AIDS as a Fraction of All Deaths: U.S. 1981-1997. Sources of data: Note 4.

Figure 4. Comparative Trajectories of Eight Killers: U.S. 1900-1997. The scale is logarithmic, with the fraction of all deaths shown on the left scale and the equivalent percentages on the right scale. Sources of data: Note 4.

Figure 5. Deaths from Aquatically Transmitted Diseases as a Fraction of All Deaths: U.S. 1900-1967. Superimposed is the percentage of homes with water and sewage service (right scale). Source of data: Note 4.

Figure 6. Deaths from Aerially Transmitted Diseases as a Fraction of All Deaths: U.S. 1900-1997. Sources of data: Note 4.

Figure 7. Motor Vehicle and All Other Accidents as a Fraction of All Deaths: U.S. 1900-1997. Sources of data: Note 4.

Figure 8. Major Cardiovascular Diseases and Malignant Neoplasms as a Fraction of All U.S. Deaths: 1900-1997. The logistic model (dashed lines) predicts that malignant neoplasms will overtake cardiovascular diseases as the number one killer in 2015. Sources of data: Note 4.

Figure 9. Major Causes of Death Analyzed with a Multi-species Model of Logistic Competition. The fractional shares are plotted on a logarithmic scale which makes linear the S-shaped rise and fall of market shares.
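The logistic curve, the linearizing transform of Figure 2a (Note 8), and the upward-plus-downward decomposition of Figure 3b can be sketched in a few lines of Python. This is a minimal illustration, not the code used to produce the figures. It assumes the common normalized form with a characteristic duration delta_t (10 percent to 90 percent) and a midpoint t_m, and it treats the 60-year and 80-year durations in the Figure 3b caption as delta_t values. The function names are hypothetical, and the pulse heights k_up and k_down are placeholders, not fitted values.

```python
import math

LN81 = math.log(81)  # a logistic with rate r takes ln(81)/r time units to go from 10% to 90%


def logistic(t: float, delta_t: float, t_m: float) -> float:
    """Normalized logistic: fraction F of a process completed at time t.

    delta_t -- years to go from F = 0.1 to F = 0.9
    t_m     -- midpoint year, where F = 0.5
    """
    return 1.0 / (1.0 + math.exp(-LN81 / delta_t * (t - t_m)))


def fisher_pry(f: float) -> float:
    """Fisher-Pry transform log10(F / (1 - F)); a logistic becomes a straight line."""
    return math.log10(f / (1.0 - f))


def bi_logistic(t: float, k_up: float, k_down: float,
                up: tuple = (60.0, 1939.0), down: tuple = (80.0, 1983.0)) -> float:
    """Upward pulse of height k_up minus a downward pulse of height k_down.

    The (delta_t, t_m) defaults are read from the Figure 3b caption and are
    assumed to be 10%-to-90% durations; k_up and k_down are hypothetical
    heights supplied by the caller, not fitted values.
    """
    return k_up * logistic(t, *up) - k_down * logistic(t, *down)


# Figure 2a: typhoid and paratyphoid fever, delta_t = 39 years, midpoint 1914.
for year in (1894.5, 1914.0, 1933.5):
    f = logistic(year, 39.0, 1914.0)
    print(f"{year}: F = {f:.2f}, Fisher-Pry = {fisher_pry(f):+.3f}")
```

Half of delta_t before and after the midpoint, F passes through 0.1 and 0.9, and the transformed values fall on a line through zero at the midpoint, which is what the inset of Figure 2a displays.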

Notes

[1] On the basic model see: Kingsland SE. Modeling Nature: Episodes in the History of Population Ecology. Chicago: University of Chicago Press, 1985. Meyer PS. Bi-logistic growth. Technological Forecasting and Social Change 1994;47:89-102.

[2] On the model of multi-species competition see Meyer PS, Yung JW, Ausubel JH. A Primer on logistic growth and substitution: the mathematics of the Loglet Lab software. Technological Forecasting and Social Change 1999;61(3):247-271.

[3] Marchetti C. Killer stories: a system exploration in mortal disease. PP-82-007. Laxenburg, Austria: International Institute for Applied Systems Analysis, 1982. For a general review of applications see: Nakicenovic N, Gruebler A, eds. Diffusion of Technologies and Social Behavior. New York: Springer-Verlag, 1991.

[4] U.S. Bureau of the Census, Historical Statistics of the United States: Colonial Times to 1970, Bicentennial Edition, Parts 1 & 2. Washington DC: U.S. Bureau of the Census, 1975. U.S. Bureau of the Census, Statistical Abstract of the United States: 1999 (119th edition). Washington DC: U.S. Bureau of the Census, 1999, and earlier editions in this annual series.

[5] Deaths worldwide are assigned a “basic cause” through the use of the “Rules for the Selection of Basic Cause” stated in the Ninth Revision of the International Classification of Diseases. Geneva: World Health Organization. These selection rules are applied when more than one cause of death appears on the death certificate, a fairly common occurrence. From an environmental perspective, the rules are significantly biased toward a medical view. In analyzing causes of death in developing countries and poor communities, the rules can be particularly problematic. For general discussion of such matters see Kastenbaum R, Kastenbaum B. Encyclopedia of Death. New York: Avon, 1993.

[6] For discussion of the relation of causes of death to the age structure of populations see Hutchinson GE. An Introduction to Population Ecology. New Haven: Yale University Press, 1978, 41-89. See also Zopf PE Jr. Mortality Patterns and Trends in the United States. Westport CT: Greenwood, 1992.

[7] Bozzo SR, Robinson CV, Hamilton LD. The use of a mortality-ratio matrix as a health index. BNL Report No. 30747. Upton NY: Brookhaven National Laboratory, 1981.

[8] For explanation of the linear transform, see Fisher JC, Pry RH. A simple substitution model of technological change. Technological Forecasting and Social Change 1971;3:75-88.

[9] For reviews of all the bacterial infections discussed in this paper see: Evans AS, Brachman PS, eds. Bacterial Infections of Humans: Epidemiology and Control. New York: Plenum, ed. 2, 1991. For discussion of viral as well as bacterial threats see: Lederberg J, Shope RE, Oaks SC Jr., eds. Emerging Infections: Microbial Threats to Health in the United States. Washington DC: National Academy Press, 1992. See also Kiple KF, ed. The Cambridge World History of Disease. Cambridge UK: Cambridge University Press, 1993.

[10] For precise exposition of Snow’s role, see Tufte ER. Visual Explanations: Images and Quantities, Evidence and Narrative. Cheshire CT: Graphics Press, 1997:27-37.

[11] Ames BN, Gold LS. Chemical carcinogenesis: too many rodent carcinogens. Proceedings of the National Academy of Sciences of the U.S.A. 1990;87:7772-7776.

[12] Tarr JA. The Search for the Ultimate Sink: Urban Pollution in Historical Perspective. Akron OH: University of Akron Press, 1996.

[13] Weihe WH. Climate, health and disease. Proceedings of the World Climate Conference. Geneva: World Meteorological Organization, 1979.

[14] Mitchell BR. European Historical Statistics 1750-1975. ed. 2. New York: Facts on File, 1980.

[15] Adams JGU. Risk homeostasis and the purpose of safety regulation. Ergonomics 1988;31:407-428.

[16] Russell JC. British Medieval Population. Albuquerque NM: Univ. of New Mexico, 1948.

[17] del Castillo BD. The Discovery and Conquest of Mexico, 1517-1521. New York: Grove, 1956.

[18] Hunt EE Jr. In: Logan MH, Hunt EE, eds. Health and the Human Condition: Perspectives on Medical Anthropology. North Scituate, MA: Duxbury, 1978.

[19] For perspectives on the relative roles of public health and medical measures see Dubos R. Mirage of Health: Utopias, Progress, and Biological Change. New York: Harper, 1959. McKeown T, Record RG, Turner RD. An interpretation of the decline of mortality in England and Wales during the twentieth century. Population Studies 1975;29:391-422. McKinlay JB, McKinlay SM. The questionable contribution of medical measures to the decline of mortality in the United States in the twentieth century. Milbank Quarterly on Health and Society Summer 1977:405-428.

[20] Pawson IG, Janes C. Massive obesity in a migrant Samoan population. American Journal of Public Health 1981;71:508-513.

[21] Doll R, Peto R. The Causes of Cancer. New York: Oxford University Press, 1981.

[22] Lederberg J, Shope RE, Oaks SC Jr., eds. Emerging Infections: Microbial Threats to Health in the United States. Washington DC: National Academy Press, 1992. Ewald PW. Evolution of Infectious Disease. New York: Oxford University Press, 1994.

[23] Holgate ST. The epidemics of allergy and asthma. Nature 1999;402(Suppl):B2-B4.

[24] The most significant present (1997) causes of death subsumed under “all causes” and not represented separately in Figure 9 are chronic obstructive pulmonary diseases (4.7%), accidents (3.9%), diabetes mellitus (2.6%), suicide (1.3%), chronic liver disease and cirrhosis (1.0%), and homicide (0.8%). The dynamic in the figure remains the same when these causes are included in the analysis. In our logic, airborne and other allergens, which cause some of the pulmonary deaths, might also be grouped with infections, although the invading agents are not bacteria or viruses.

Maglevs and the Vision of St. Hubert

1. Introduction

The emblems of my essay are maglevs speeding through tunnels below the earth and a crucifix glowing between the antlers of a stag, the vision of St. Hubert. Levitated and propelled by magnets, maglev trains offer passengers green mobility. Maglevs symbolize technology, while the fellowship of St. Hubert with other animals symbolizes behavior.

Better technology and behavior can do much to spare and restore Nature during the 21st century, even as more numerous humans prosper.

In this essay I explore the areas humans use for fishing, farming, logging, and cities. Offsetting the sprawl of cities, rising yields in farms and forests and changing tastes can spare wide expanses of land. Shifting from hunting seas to farming fish can similarly spare Nature. I will conclude that cardinal resolutions to census marine life, lift crop yields, increase forest area, and tunnel for maglevs would firmly promote the Great Restoration of Nature on land and in the sea. First, let me share the vision of St. Hubert.

2. The Vision of St. Hubert

In The Hague, about the year 1650, a 25-year-old Dutch artist, Paulus Potter, painted a multi-paneled picture that graphically expresses contemporary emotions about the environment.[i] Potter named his picture “The Life of the Hunter” (Figure 1). The upper left panel establishes the message of the picture with reference to the legend of the vision of St. Hubert.[ii] Around the year 700, Hubert, a Frankish courtier, hunted deep in the Ardennes forest on Good Friday, a Christian spring holy day. A stag appeared before Hubert with a crucifix glowing between its antlers, and a heavenly voice reproached him for hunting, particularly on Good Friday. Hubert’s aim faltered, and he renounced his bow and arrow. He also renounced his riches and military honors, and became a priest in Maastricht.

The upper middle panel, in contrast, shows a hunter with two hounds. Seven panels on the sides and bottom show the hunter and his servant hounds targeting other animals: rabbit, wolf, bull, lion, wild boar, bear, and mountain goat. The hunter’s technologies include sword, bow, and guns.

One panel on either side recognizes consciousness, in fact, self-consciousness, in our fellow animals. In the middle on the right, a leopard marvels at its reflection in a mirror. On the lower left apes play with their self-images in a shiny plate.

In the large central panels Potter judges 17th century hunters. First, in the upper panel the man and his hounds come before a court of the animals they have hunted. In the lower central, final panel the animal jury celebrates uproariously, while the wolf, rabbit, and monkey cooperate to hang the hunter’s dogs as an elephant, goat, and bear roast the hunter himself. Paulus Potter believed the stag’s glowing cross converted St. Hubert to sustainability. The hunter remained unreconstructed.

With Paulus and Hubert, we can agree on the vision of a planet teeming with life, a Great Restoration of Nature. And most would agree we need ways to accommodate the billions more humans likely to arrive while simultaneously lifting humanity’s standard of living. In the end, two means exist to achieve the Great Restoration. St. Hubert exemplifies one, behavioral change. The hunter’s primitive weapons hint at the second, technology. What can we expect from each? First, some words about behavior.

3. Our Triune Brain

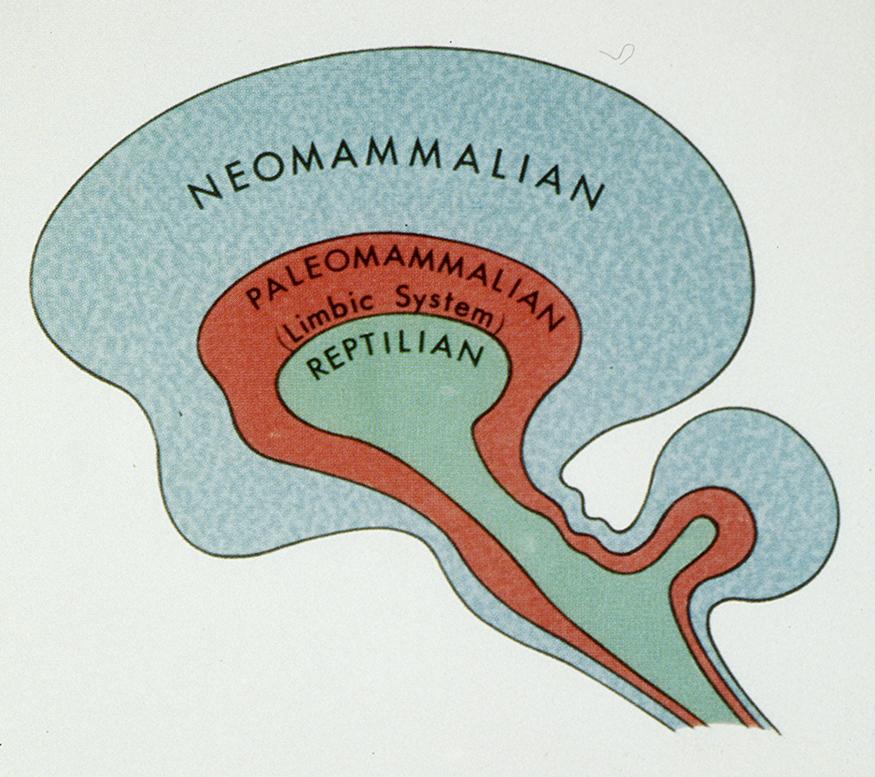

In a fundamental 1990 book, The Triune Brain in Evolution, neuroscientist Paul MacLean explained that humans have three brains, each developed during a stage of evolution.[iii] The earliest, found in snakes, MacLean calls the reptilian brain (Figure 2). In mammals another brain appeared, the paleomammalian, bringing such new behavior as care of the young and mutual grooming. In humans came the most recent evolutionary structure, the hugely expanded neocortex. This neomammalian brain brought language, visualization, and symbolic skills. But conservative evolution did not replace the reptilian brain; it added to it. Thus, we share primal behavior with other animals, including snakes. The reptilian brain controls courting mates, patrolling territory, dominating submissives, and flocking together. The reptilian brain makes most of the sensational news and will not retreat. Our brains and thus our basic instincts and behaviors have remained largely unchanged for a million years or more. They will not change on time scales considered for “sustainable development.”

Of course, innovations may occur that control individual and social behavior. Law and religion both try, though the snake brain keeps reasserting itself, on Wall Street, in the Balkans, and clawing for Nobel prizes in Stockholm.

Pharmacology also tries for behavioral control, with increasing success. Sales of new “anti-depressants,” mostly tinkering with serotonin in the brain, neared $10 billion in 2000, although the drugs had penetrated perhaps only 10% of their global market. Drugs can surely make humans very happy, but without restoring Nature.

Because, I believe, behavioral sanctions will be hard-pressed to control the eight or ten billion snake brains persisting in humanity, we should use our hugely expanded neocortex on technology that allows us to tread lightly on Earth. Since time immemorial, Homo faber has been trying to make things better and to make better things. During the past two centuries we have become more systematic and aggressive about it, through the diffusion of research and development and of the institutions that perform it, including corporations and universities.

What can behavior and technology do to spare and restore Nature during the 21st century? Let’s consider the seas and then the land.

4. Sparing sea life

St. Hubert exemplifies behavior to spare land’s animals. Many thousands of years ago our ancestors sharpened sticks and began hunting. They probably extinguished a few species, such as woolly mammoths, and had they kept on hunting, they might have extinguished many more. Then without waiting on St. Hubert, our ancestors ten thousand years ago began sparing land animals in Nature by domesticating cows, pigs, goats, and sheep. By herding rather than hunting animals, humans began a technology to spare wild animals on land.

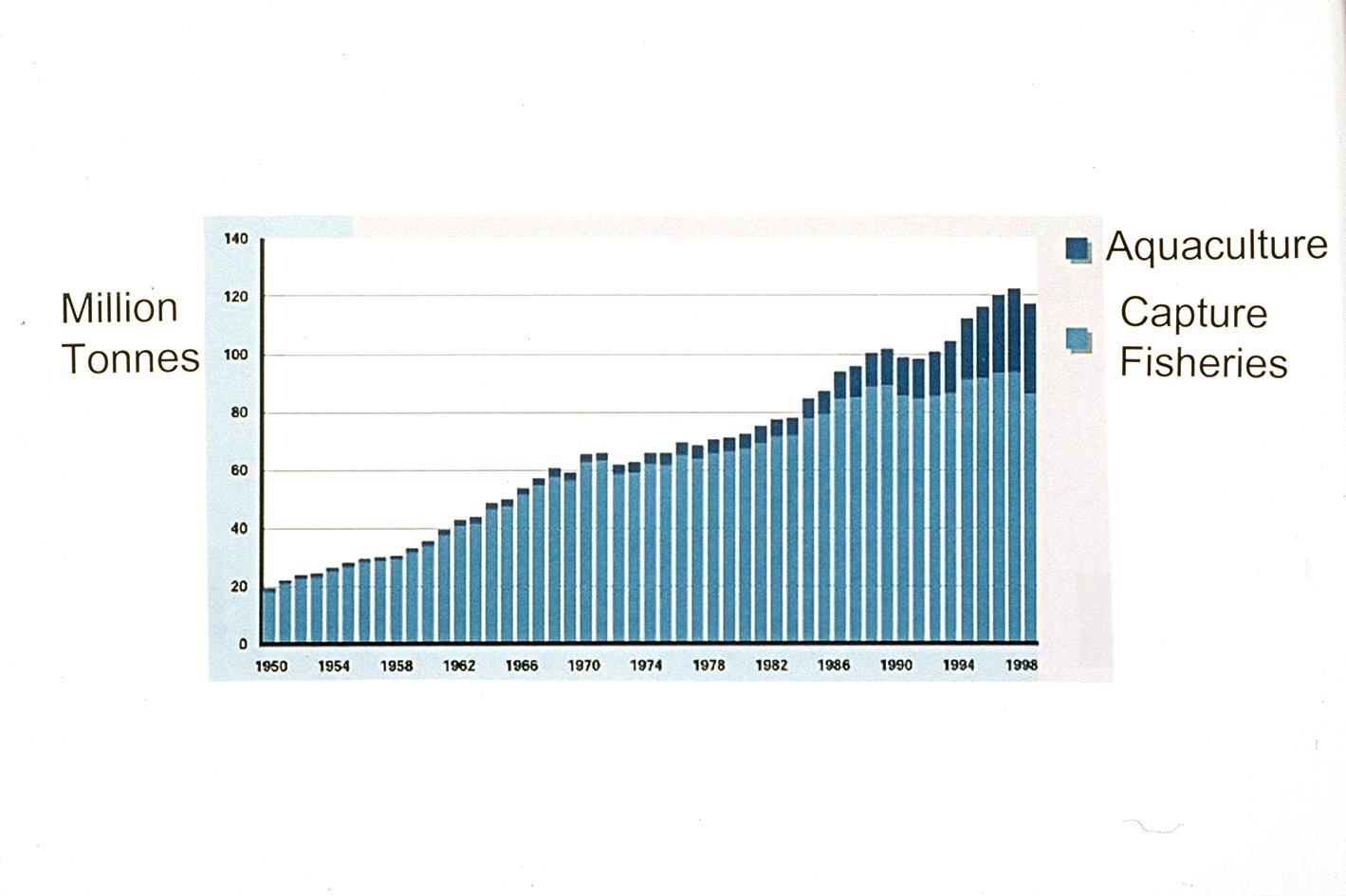

In 2001 about 90 million tons of fish are being taken wild from the sea and 30 million tons from fish farms and ranches. Sadly, little reliable information quantifies the diversity, distribution, and abundance of life in the sea, but many anecdotes suggest large, degrading changes. In any case, the ancient sparing of land animals by farming shows us an effective way to spare the fish in the sea. We need to raise the share we farm and lower the share we catch. Other human activities, such as urbanization of coastlines and tampering with the climate, disturb the seas, but today fishing matters most. Compare an ocean before and after heavy fishing.

Fish farming does not require invention. It has been around for a long time. For centuries, the Chinese have been doing very nicely raising herbivores, such as carp.

Following the Chinese example, one feeds crops grown on land by farmers to herbivorous fish in ponds. Much aquaculture of carp and tilapia in Southeast Asia and the Philippines and of catfish near the Gulf Coast of the USA takes this form. The fish grown in the ponds spare fish from the ocean. Like poultry, fish efficiently convert protein in feed to protein in meat. And because the fish do not have to stand, they convert calories in feed into meat even more efficiently than poultry. All the improvements such as breeding and disease control that have made poultry production more efficient can be and have been applied to aquaculture, improving the conversion of feed to meat and sparing wild fish.[iv] With due care for effluents and pathogens, this model can multiply many times in tonnage.

A riskier but fascinating alternative, ocean farming, would actually lift life in the oceans.[v] The oceans vary vastly in their present productivity. In parts of the ocean crystal clear water enables a person to see 50 meters down. These are deserts. In a few garden areas, where one can see only a meter or so, life abounds. Water rich in iron, phosphorus, trace metals, silica, and nitrate makes these gardens dense with plants and animals. Experiments on marine sequestration of carbon demonstrate the extraordinary leverage of iron to make the oceans bloom.

Adding the right nutrients in the right places might lift fish yields by a factor of hundreds. Challenges abound because the ocean moves and mixes, both vertically and horizontally. Nevertheless, technically and economically promising proposals exist for farming on a large scale in the open ocean with fertilization in deep water. One kg of buoyant fertilizer, mainly iron with some phosphate, could produce a few thousand tons of biomass.[vi]

Improving the fishes’ pasture of marine plants is the crucial first step to greater productivity. Zooplankton then graze on phytoplankton, and the food chain continues until the sea teems with diverse life. Fertilizing 250,000 sq km of barren tropical ocean, the size of the USA state of Colorado, in principle might produce a catch matching today’s fish market of 100 million tons. Colorado covers less than one-tenth of 1% of the area of the world ocean.
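The Colorado comparison is simple arithmetic, sketched below in Python. The world ocean area of about 361 million sq km is an assumed round figure that the essay does not state; the sketch also shows the catch per unit area that the 100-million-ton target would imply. Variable names are illustrative.

```python
WORLD_OCEAN_SQ_KM = 361e6   # assumption: approximate area of the world ocean
FARM_SQ_KM = 250_000        # fertilized tropical ocean proposed in the text
CATCH_TONNES = 100e6        # yearly catch matching today's fish market (from the text)

share_of_ocean = FARM_SQ_KM / WORLD_OCEAN_SQ_KM
implied_yield = CATCH_TONNES / FARM_SQ_KM   # tonnes per sq km per year

print(f"share of world ocean: {share_of_ocean:.4%}")
print(f"implied catch: {implied_yield:.0f} tonnes per sq km per year")
```

The share comes out below 0.1 percent, consistent with the text; the implied catch of roughly 400 tonnes per sq km per year indicates how productive such fertilized waters would have to become.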

The point is that today’s depleting harvest of wild fishes and destruction of marine habitat to capture them need not continue. The 25% of seafood already raised by aquaculture signals the potential for Restoration (Figure 3). Following the example of farmers who spare land and wildlife by raising yields on land, we can concentrate our fishing in highly productive, closed systems on land and in a few highly productive ocean farms. Humanity can act to restore the seas, and thus also preserve traditional fishing where communities value it. With smart aquaculture, we can multiply life in the oceans while feeding humanity and restoring Nature. St. Hubert, of course, might improve the marine prospect by not eating fellow creatures from the sea.

5. Sparing farmland

What about sparing nature on land? How much must our farming, logging, and cities take?

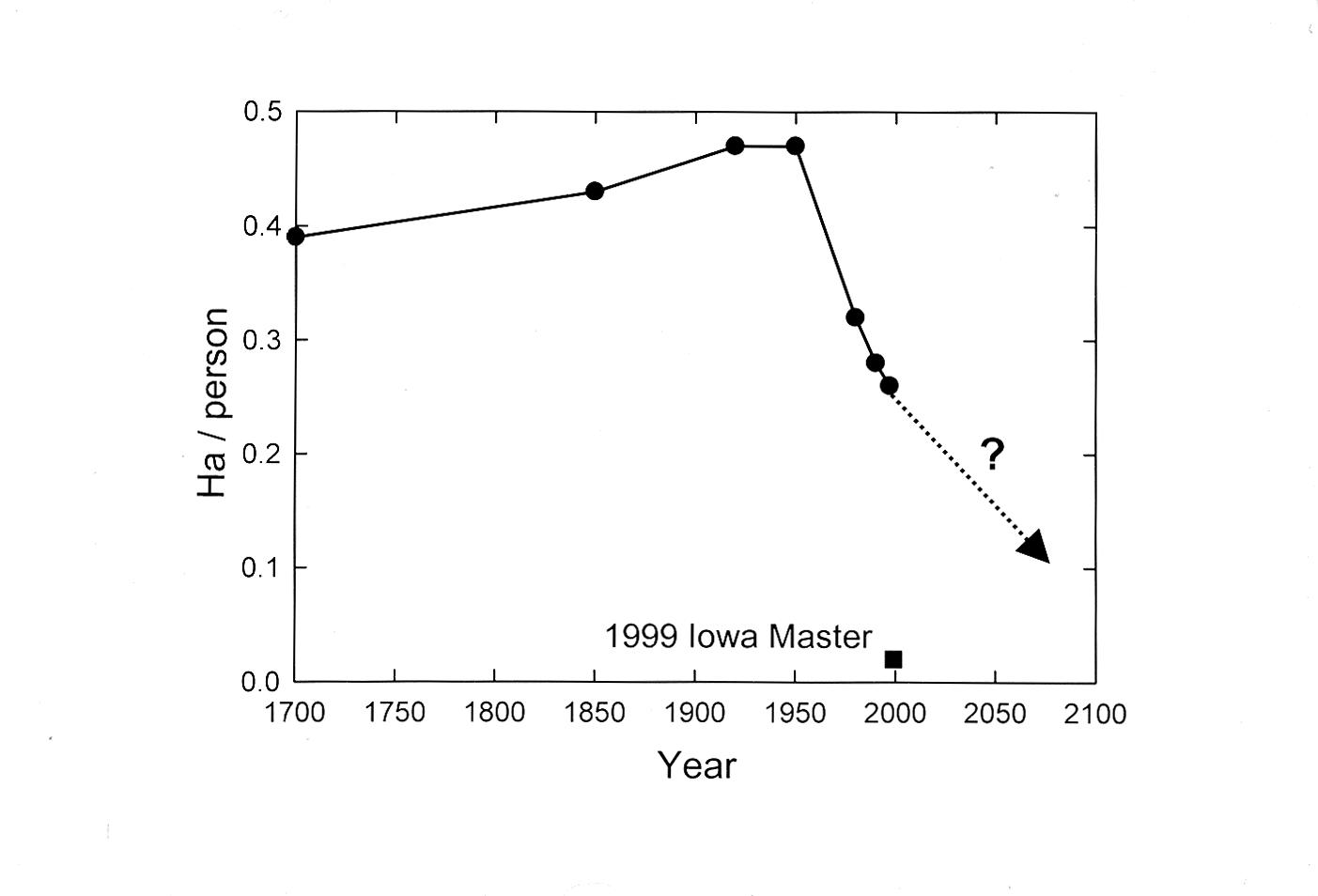

First, can we spare land for nature while producing our food?[vii] Yields per hectare measure the productivity of land and the efficiency of land use. For centuries cropped land expanded faster than population, and cropland per person rose as people sought more proteins and calories. Fifty years ago farmers stopped plowing up nature (Figure 4). During the past half-century, ratios of crops to land for the world’s major grains (corn, rice, soybean, and wheat) have climbed fast on all six of the farm continents. Between 1972 and 1995 Chinese cereal yields rose 3.3% per year per hectare. Per hectare, the global Food Index of the Food and Agriculture Organization of the UN, which reflects both quantity and quality of food, has risen 2.3% annually since 1960. In the USA in 1900 the protein or calories raised on one Iowa hectare fed four people for the year. In 2000 a hectare on the Iowa farm of master grower Mr. Francis Childs could feed eighty people for the year.
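The Iowa comparison can be restated as a compound annual growth rate. The Python sketch below, with a hypothetical helper name, computes the constant yearly rate that turns four people fed per hectare in 1900 into eighty in 2000. Because it compares an average 1900 hectare with a top grower in 2000, it likely overstates the gain of the average farmer.

```python
def implied_annual_rate(start: float, end: float, years: float) -> float:
    """Constant compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


# People fed per Iowa hectare: about 4 in 1900, about 80 on the Childs farm in 2000.
iowa_rate = implied_annual_rate(4, 80, 100)
print(f"implied growth: {iowa_rate:.2%} per year")
```

A twentyfold rise over a century works out to about 3% per year, of the same order as the Chinese and FAO rates quoted above.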

Since the middle of the 20th century, such productivity gains have stabilized global cropland, and allowed reductions of cropland in many nations, including China. Meanwhile, growth in the world’s food supply has continued to outpace population, including in poor countries. A cluster of innovations including tractors, seeds, chemicals, and irrigation, joined through timely information flows and better organized markets, raised the yields to feed billions more without clearing new fields. We have decoupled food from acreage.

High-yield agriculture need not tarnish the land. Precision agriculture is the key. This approach to farming relies on technology and information to help the grower prescribe and deliver precise inputs of fertilizer, pesticides, seed, and water exactly where they are needed. We had two revolutions in agriculture in the 20th century. First, the tractors of mechanical engineers saved the oats that horses ate and multiplied the power of labor. Then chemical engineers and plant breeders made more productive plants. The present agricultural revolution comes from information engineers. What do the past and future agricultural revolutions mean for land?

To produce their present crop of wheat, Indian farmers would need to farm more than three times as much land today as they actually do, if their yields had remained at their 1966 level. Let me offer a second comparison: a USA city of 500,000 people in 2000 and a USA city of 500,000 people with the 2000 diet but the yields of 1920. Farming as Americans did 80 years ago while eating as Americans do now would require 4 times as much land for the city, about 450,000 hectares instead of 110,000.

What can we look forward to globally? The agricultural production frontier remains spacious. On the same area, the average world farmer grows only about 20 percent of the corn of the top Iowa farmer, and the average Iowa farmer lags more than 30 years behind the state-of-the-art of his most productive neighbor. On average the world corn farmer has been making the greatest annual percentage improvement. If during the next 60 to 70 years, the world farmer reaches the average yield of today’s USA corn grower, the ten billion people then likely to live on Earth will need only half of today’s cropland. This will happen if farmers maintain on average the yearly 2% worldwide growth per hectare of the Food Index achieved since 1960, in other words, if the dynamics of social learning continue as usual. Even if the rate falls to 1%, an area the size of India, globally, could revert from agriculture to woodland or other uses. Averaging an improvement of 2% per year in the productivity and efficiency of natural resource use may be a useful operational definition of sustainability.
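The “half of today’s cropland” figure follows from a simple identity: land needed scales with population times diet, divided by yield. The Python sketch below applies it under stated simplifications: diet held constant, population rising from about 6 billion to 10 billion, and yields compounding at 2% per year for 60 years. The function name is hypothetical, and the model ignores everything else that shapes land use.

```python
def cropland_ratio(pop_ratio: float, yield_growth: float, years: int,
                   diet_ratio: float = 1.0) -> float:
    """Future cropland as a fraction of today's: population x diet / yield."""
    return pop_ratio * diet_ratio / (1.0 + yield_growth) ** years


# About 6 billion people today, 10 billion later; yields +2% per year for 60 years.
r2 = cropland_ratio(10 / 6, 0.02, 60)
print(f"2% per year over 60 years: {r2:.2f} of today's cropland")
```

Yields multiply about 3.3-fold over 60 years while population grows about 1.7-fold, leaving roughly half of today’s cropland in use. The diet_ratio parameter marks where the factor-of-two effect of diet discussed below would enter.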

Importantly, as Hubert would note, a vegetarian diet of 3,000 primary calories per day halves the difficulty or doubles the land spared. Hubert might also observe that eating from a salad bar is like taking a sport utility vehicle to a gasoline filling station. Living on crisp lettuce, which offers almost no protein or calories, demands many times the energy of a simple rice-and-beans vegan diet.[viii] Hubert would wonder at the greenhouses of the Benelux countries glowing year round day and night. I will trust more in the technical advance of farmers than in behavioral change by eaters. The snake brain is usually a gourmet and a gourmand.

Fortunately, by lifting yields while minimizing environmental fallout, farmers can effect the Great Restoration.

6. Sparing forests

Farmers may no longer pose much threat to nature. What about lumberjacks? As with food, the area of land needed for wood depends on population, income, and the wood “diet” (the intensity of use of wood products in the economy), divided by the yield of the forests. Let’s focus on industrial wood: logs cut for lumber, plywood, and pulp for paper.

The wood “diet” required to nourish an economy is determined by the tastes and actions of consumers and by the efficiency with which millers transform virgin wood into useful products.[ix] Changing tastes and technological advances are already lightening pressure on forests. Concrete, steel, and plastics have replaced much of the wood once used in railroad ties, house walls, and flooring. Demand for lumber has become sluggish, and in the last decade world consumption of boards and plywood actually declined. Even the appetite for pulpwood, logs that end as sheets of paper and board, has leveled.

Meanwhile, more efficient lumber and paper milling is already carving more value from the trees we cut.[x] And recycling has helped close leaks in the paper cycle. In 1970, consumers recycled less than one-fifth of their paper; today, the world average is double that.

The wood products industry has learned to increase its revenue while moderating its consumption of trees. Demand for industrial wood, now about 1.5 billion cubic meters per year, has risen only 1% annually since 1960 while the world economy has multiplied at nearly four times that rate. If millers improve their efficiency, manufacturers deliver higher value through the better engineering of wood products, and consumers recycle and replace more, in 2050 virgin demand could be only about 2 billion cubic meters and thus permit reduction in the area of forests cut for lumber and paper.
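The demand figures above can be checked with compound growth. The Python sketch below assumes a 50-year span from about 2000 to 2050 and takes world economic growth as 4% per year, a round reading of “nearly four times” the 1% rate for wood. It compares the trend projection with the essay’s 2-billion-cubic-meter target.

```python
# Industrial wood demand, in cubic meters per year (figures from the text).
DEMAND_NOW = 1.5e9      # about 2000
DEMAND_2050 = 2.0e9     # the essay's projection with better milling, engineering, and recycling
YEARS = 50              # assumed span, roughly 2000 to 2050

# Continue the historical 1% per year growth for 50 years.
trend_2050 = DEMAND_NOW * 1.01 ** YEARS

# Constant annual growth rate that would instead land on 2 billion m3.
implied_rate = (DEMAND_2050 / DEMAND_NOW) ** (1 / YEARS) - 1

# Yearly change in wood used per unit of world output, assuming 4% economic growth.
intensity_change = 1.01 / 1.04 - 1

print(f"trend demand in 2050: {trend_2050 / 1e9:.2f} billion m3")
print(f"growth rate reaching 2 billion m3: {implied_rate:.2%} per year")
print(f"wood intensity of the economy: {intensity_change:+.1%} per year")
```

Continuing the 1% trend would give about 2.5 billion cubic meters by 2050, so the 2-billion figure implies demand growth slowing to roughly 0.6% per year. Meanwhile the wood intensity of the economy has been falling by close to 3% per year.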

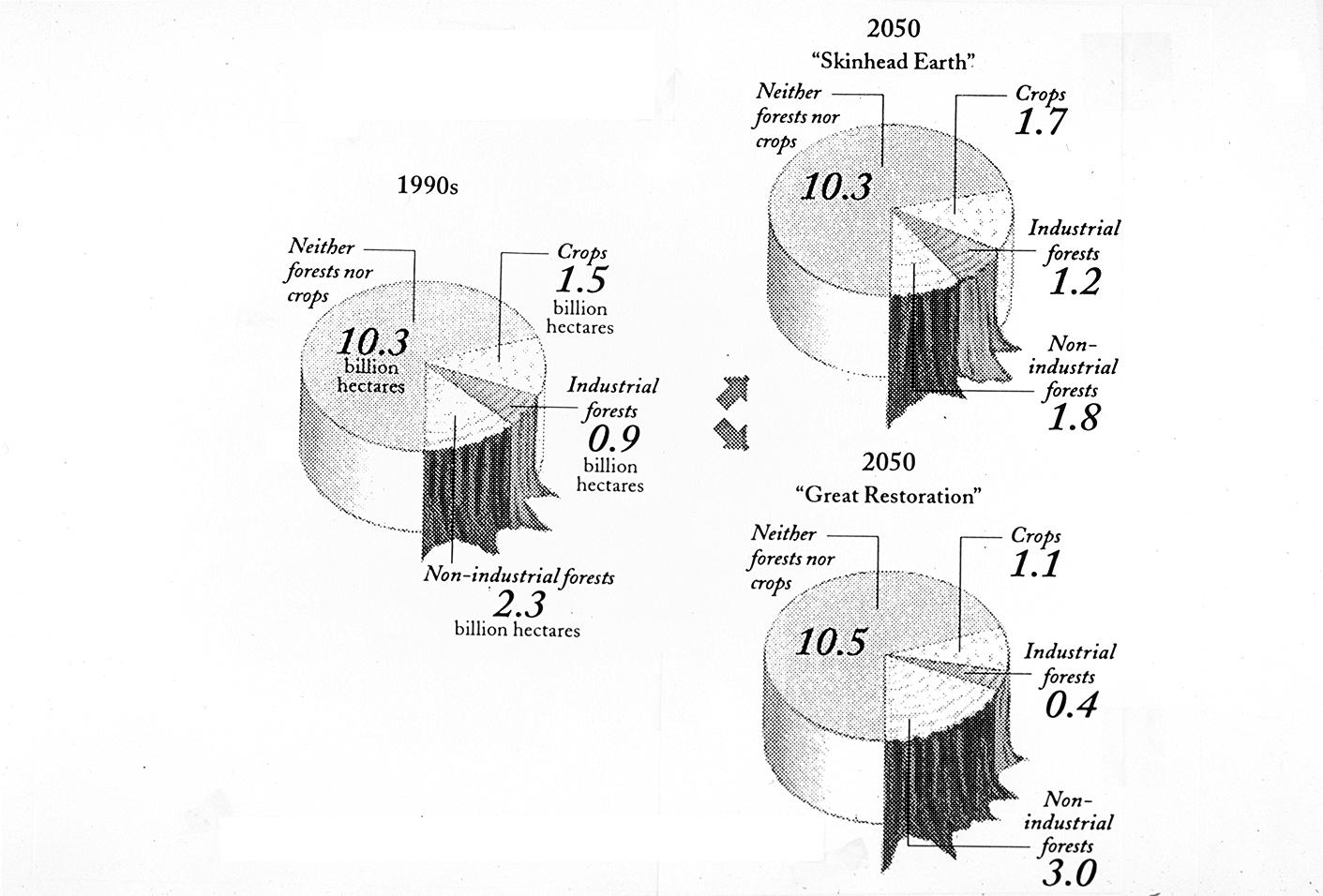

As with agriculture, what permits this reduction is lifting yield. The cubic meters of wood grown per hectare of forest each year provide strong leverage for change. Historically, forestry has been a classic primary industry, as Hubert doubtless saw in the shrinking Ardennes. Like fishers and hunters, foresters have exhausted local resources and then moved on, returning only if trees regenerated on their own. Most of the world’s forests still deliver wood this way, with an average annual yield of perhaps two cubic meters of wood per hectare. If yield remains at that rate, by 2050 lumberjacks will regularly saw nearly half the world’s forests (Figure 5). That is a dismal vision: a chainsaw every other hectare, skinhead Earth.

Lifting yields, however, will spare more forests. Raising average yields 2 percent per year would lift growth to over 5 cubic meters per hectare by 2050 and shrink production forests to just about 12 percent of all woodlands. Once again, high yields can afford a Great Restoration.
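The yield figure is a compound-growth calculation. The Python sketch below assumes 50 years of 2% annual improvement starting from 2 cubic meters per hectare. It also shows how much less forest each cubic meter would then require. The 12 percent share in the text depends as well on the demand assumptions above, which this sketch does not model.

```python
BASE_YIELD = 2.0   # m3 per hectare per year, typical of natural forests today (from the text)
RATE = 0.02        # yield improvement per year
YEARS = 50         # assumed span, roughly 2000 to 2050

yield_2050 = BASE_YIELD * (1 + RATE) ** YEARS
area_factor = BASE_YIELD / yield_2050   # hectares needed per m3, relative to today

print(f"yield in 2050: {yield_2050:.1f} m3 per hectare per year")
print(f"area per m3 relative to today: {area_factor:.2f}")
```

Fifty years at 2% multiplies yield about 2.7-fold, to roughly 5.4 cubic meters per hectare, so each cubic meter needs a little over a third of today’s area.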

At likely planting rates, at least one billion cubic meters of wood — half the world’s supply — could come from plantations by the year 2050. Semi-natural forests — for example, those that regenerate naturally but are thinned for higher yield — could supply most of the rest. Small-scale traditional “community forestry” could also deliver a small fraction of industrial wood. Such arrangements, in which forest dwellers, often indigenous peoples, earn revenue from commercial timber, can provide essential protection to woodlands and their inhabitants.

More than a fifth of the world’s virgin wood is already produced from forests with yields above 7 m3 per hectare. Plantations in Brazil, Chile, and New Zealand can sustain yearly growth of more than 20 m3 per hectare with pine trees. In Brazil eucalyptus, a hardwood good for some papers, delivers more than 40 m3 per hectare. In the Pacific Northwest and British Columbia, with plentiful rainfall, hybrid poplars deliver 50 m3 per hectare.

Environmentalists worry that industrial plantations will deplete nutrients and water in the soil and produce a vulnerable monoculture of trees where a rich diversity of species should prevail. Meanwhile, advocates for indigenous peoples, who have witnessed the harm caused by crude industrial logging of natural forests, warn that plantations will dislocate forest dwellers and upset local economies. Pressure from these groups helps explain why the best practices in plantation forestry now stress the protection of environmental quality and human rights. As with most innovations, achieving the promise of high-yield forestry will require feedback from a watchful public.

The main benefit of the new approach to forests will reside in the natural habitat spared by more efficient forestry. An industry that draws from planted forests rather than cutting from the wild will disturb only one-fifth or less of the area for the same volume of wood. Instead of logging half the world’s forests, humanity can leave almost 90% of them minimally disturbed. And nearly all new tree plantations are established on abandoned croplands, which are already abundant and accessible. Although the technology of forestry rather than the behavior of hunters spared the forests and stags, Hubert would still be pleased.

7. Sparing pavement

What then are the areas of land that may be built upon? One of the most basic human instincts, from the snake brain, is territorial. Territorial animals strive for territory. Maximizing range means maximizing access to resources. Most of human history is a bloody testimony to the instinct to maximize range. For humans, a large accessible territory means greater liberty in choosing the points of gravity of our lives: the home and the workplace.

Around 1800, new machines began transporting people faster and faster, gobbling up the kilometers and revolutionizing territorial organization.[xi] The highly successful machines are few (train, motor vehicle, and plane), and their diffusion is slow. Each has taken from 50 to 100 years to saturate its niche. Each machine progressively stretches the distance traveled daily beyond the 5 km of mobility on foot. Collectively, their outcome is a steady increase in mobility. For example, in France, from 1800 to today, mobility has extended an average of more than 3% per year, doubling about every 25 years. Mobility is constrained by two invariant budgets, one for money and one for time. Humans always spend an average of 12-15% of their income for travel. And the snake brain makes us visit our territory for about one hour each day, the travel time budget. Hubert doubtless averaged about one hour of walking per day.
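Two pieces of arithmetic sit behind this paragraph: the doubling time of a steady growth rate, and the distance a fixed one-hour travel budget buys at a given speed. The Python sketch below computes both. The function names are hypothetical, and the 5 km/h walking speed is an assumption consistent with the text’s 5 km of daily mobility on foot.

```python
import math


def doubling_time(rate: float) -> float:
    """Years for a quantity growing at a constant annual `rate` to double."""
    return math.log(2) / math.log(1 + rate)


def daily_range_km(mean_speed_kmh: float, budget_h: float = 1.0) -> float:
    """Distance covered per day within a fixed travel-time budget."""
    return mean_speed_kmh * budget_h


print(f"doubling time at 3% per year: {doubling_time(0.03):.1f} years")
print(f"daily range on foot at 5 km/h: {daily_range_km(5.0):.0f} km")
```

At exactly 3% per year mobility doubles in about 23 years, while a 25-year doubling corresponds to roughly 2.8% per year, so the text’s figures are rounded. With the time budget fixed, daily range grows only in proportion to average speed, which is why faster machines rather than longer trips drive the rise in mobility.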

The essence is that the transport system and the number of people basically determine covered land.[xii] Greater wealth enables people to buy higher speed, and when transit quickens, cities spread. Both average wealth and numbers will grow, so cities will take more land.

The USA is a country with a fast-growing population, and expects about another 100 million people over the next century. Californians pave or build on about 600 m2 each. At the California rate, the USA increase would consume 6 million hectares, about the combined land area of the Netherlands and Belgium. Globally, if everyone new builds at the present California rate, 4 billion added to today’s 6 billion people would cover about 240 million hectares, midway in size between Mexico and Argentina.
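The land figures follow from multiplying added people by the California rate and converting square meters to hectares (10,000 m2 each). The Python sketch below, with a hypothetical helper name, reproduces both numbers from the text.

```python
M2_PER_HECTARE = 10_000
CALIFORNIA_M2_PER_PERSON = 600   # paved or built-on area per Californian (from the text)


def built_hectares(added_people: float,
                   m2_per_person: float = CALIFORNIA_M2_PER_PERSON) -> float:
    """Hectares covered if each added person builds at the given rate."""
    return added_people * m2_per_person / M2_PER_HECTARE


usa = built_hectares(100e6)    # about 100 million more Americans over the century
world = built_hectares(4e9)    # 6 billion people growing to 10 billion
print(f"USA: {usa / 1e6:.0f} million ha; world: {world / 1e6:.0f} million ha")
```

Passing a smaller m2_per_person shows how directly denser building, discussed next, shrinks the land taken.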

Towering higher, urbanites could spare even more land for nature. In fact, migration from the country to the city formed the long prologue to the Great Restoration. Still, cities will take from nature.

But, to compensate, we can move much of our transit underground, so we need not further tar the landscape. The magnetically levitated train, or maglev, a container without wings, without motors, without combustibles aboard, suspended and propelled by magnetic fields generated in a sort of guard rail, nears readiness (Figure 6). A route from the airport of Shanghai to the city center will soon open. If one puts the maglev underground in a low pressure or vacuum tube, as the Swiss think of doing with their Swissmetro, then we would have the equivalent of a plane that flies at high altitude with few limitations on speed. The Swiss maglev plan links all Swiss cities in 10 minutes.[xiii]

Maglevs in low-pressure tubes can be ten times as energy efficient as present transport systems. In fact, they need consume almost no net energy. Had Hubert crossed the USA in 1850 from St. Louis to San Francisco on the Overland Stage, he would have exhausted 2700 fresh horses.

Future human settlements could grow around a maglev station with an area of about 1 km2 and 100,000 inhabitants, be largely pedestrian, and via the maglev form part of a network of city services within walking distance. The quarters could be surrounded by green land. In fact, cities please people, especially those that have grown naturally without suffering the sadism of architects and urban planners.

Technology already holds green mobility in store for us. Naturally, maglevs will need 100 years to diffuse, like the train, auto, or plane. With maglevs, together with personal vehicles and airplanes operating on hydrogen, Hubert could range hundreds of kilometers daily for his ministry, fulfilling the urges of his reptilian brain, while leaving the land and air pristine.

8. Cardinal Resolutions

How can the Great Restoration of Nature I envision be accomplished? Hubert became only a Bishop, but in his honor, I propose we promote four cardinal resolutions, one each for fish, farms, forests, and transport.

Resolution one: The stakeholders in the oceans, including the scientific community, shall conduct a worldwide Census of Marine Life between now and the year 2010. Some of us already are trying.[xiv] The purpose of the Census is to assess and explain the diversity, distribution, and abundance of marine life. This Census can mark the start of the Great Restoration for marine life, helping us move from uncertain anecdotes to reliable quantities. The Census of Marine Life can provide the impetus and foundation for a vast expansion of marine protected areas and wiser management of life in the sea.

Resolution two: The many partners in the farming enterprise shall continue to lift yields per hectare by 2% per year throughout the 21st century. Science and technology can double and redouble yields and thus spare hundreds of millions of hectares for Nature. We should also be mindful that our diets, that is, behavior, can affect land needed for farming by a factor of two.

Resolution three: Foresters, millers, and consumers shall work together to increase global forest area by 10%, about 300 million hectares, by 2050. Furthermore, we will concentrate logging on about 10% of forest land. Behavior can moderate demand for wood products, and foresters can make trees that speedily meet that demand, minimizing the forest we disturb. Curiously, neither the diplomacy nor science about carbon and greenhouse warming has yet offered a visionary global target or timetable for land use.[xv]

Resolution four: The major cities of the world shall start digging tunnels for maglevs. While cities will sprawl, our transport need not pave paradise or pollute the air. Although our snake brains and the instinct to travel will still determine travel behavior, maglevs can zoom underground, sparing green landscape.

Clearly, to realize our vision we shall need both maglevs and the vision of St. Hubert. Simply promoting the gentle values of St. Hubert is not enough. Soon after he painted his masterpiece, Paulus Potter died of tuberculosis and was buried in Amsterdam on 7 January 1654 at the age of 29. In fact, Potter suffered poor engineering. Observe in The Life of the Hunter that the branch of the tree from which the dogs hang does not bend.

Because we are already more than 6 billion and heading for 10 in the new century, we already have a Faustian bargain with technology. Having come this far with technology, we have no road back. If Indian wheat farmers allow yields to fall to the level of 1960, to sustain the present harvest they would need to clear nearly 50 million hectares, about the area of Madhya Pradesh or Spain.

So, we must engage the elements of human society that impel us toward fish farms, landless agriculture, productive timber, and green mobility. And we must not be fooled into thinking that the talk of politicians and diplomats will achieve our goals. The maglev engineers and farmers and foresters are the authentic movers, aided by science. Still, a helpful step is to lock the vision of the Great Restoration in our minds and make our cardinal resolutions for fish, farms, forests, and transport. In the 21st century, we have both the glowing vision of St. Hubert and the technology exemplified by maglevs to realize the Great Restoration of Nature.

Acknowledgements: Georgia Healey, Cesare Marchetti, Perrin Meyer, David Victor, Iddo Wernick, Paul Waggoner, and especially Diana Wolff-Albers for introducing me to Paulus Potter.

Figures

Figure 1. The Life of the Hunter by Paulus Potter. The painting hangs in the museum of the Hermitage, St. Petersburg.

Figure 2. Symbolic representation of the triune brain. Source: P. D. MacLean, 1990.

Figure 3. World capture fisheries and aquaculture production. Note the rising amount and share of aquaculture. Source: Food and Agriculture Organization of the UN, The state of world fisheries and aquaculture 2000, Rome. https://www.fao.org/DOCREP/003/X8002E/X8002E00.htm

Figure 4. Reversal in area of land used to feed a person. After gradually increasing for centuries, the worldwide area of cropland per person began dropping steeply in about 1950, when yields per hectare began to climb. The square shows the area needed by the Iowa Master Corn Grower of 1999 to supply one person a year’s worth of calories. The dotted line shows how sustaining a 2 percent annual lift in average yields extends the reversal. Sources of data: Food and Agriculture Organization of the United Nations, various Yearbooks; National Corn Growers Association, National Corngrowers Association Announces 1999 Corn Yield Contest Winners, Hot Off the Cob, St. Louis MO, 15 December 1999; J. F. Richards, 1990, “Land Transformations,” in The Earth as Transformed by Human Action, B. L. Turner II et al. eds., Cambridge University Press, Cambridge, UK.

Figure 5. Present and projected land use and land cover. Today’s 2.4 billion hectares used for crops and industrial forests spread to 2.9 billion on “Skinhead Earth,” while in the “Great Restoration” they contract to 1.5 billion. Source: D. G. Victor and J. H. Ausubel, Restoring the Forests, Foreign Affairs 79(6):127-144, 2000.

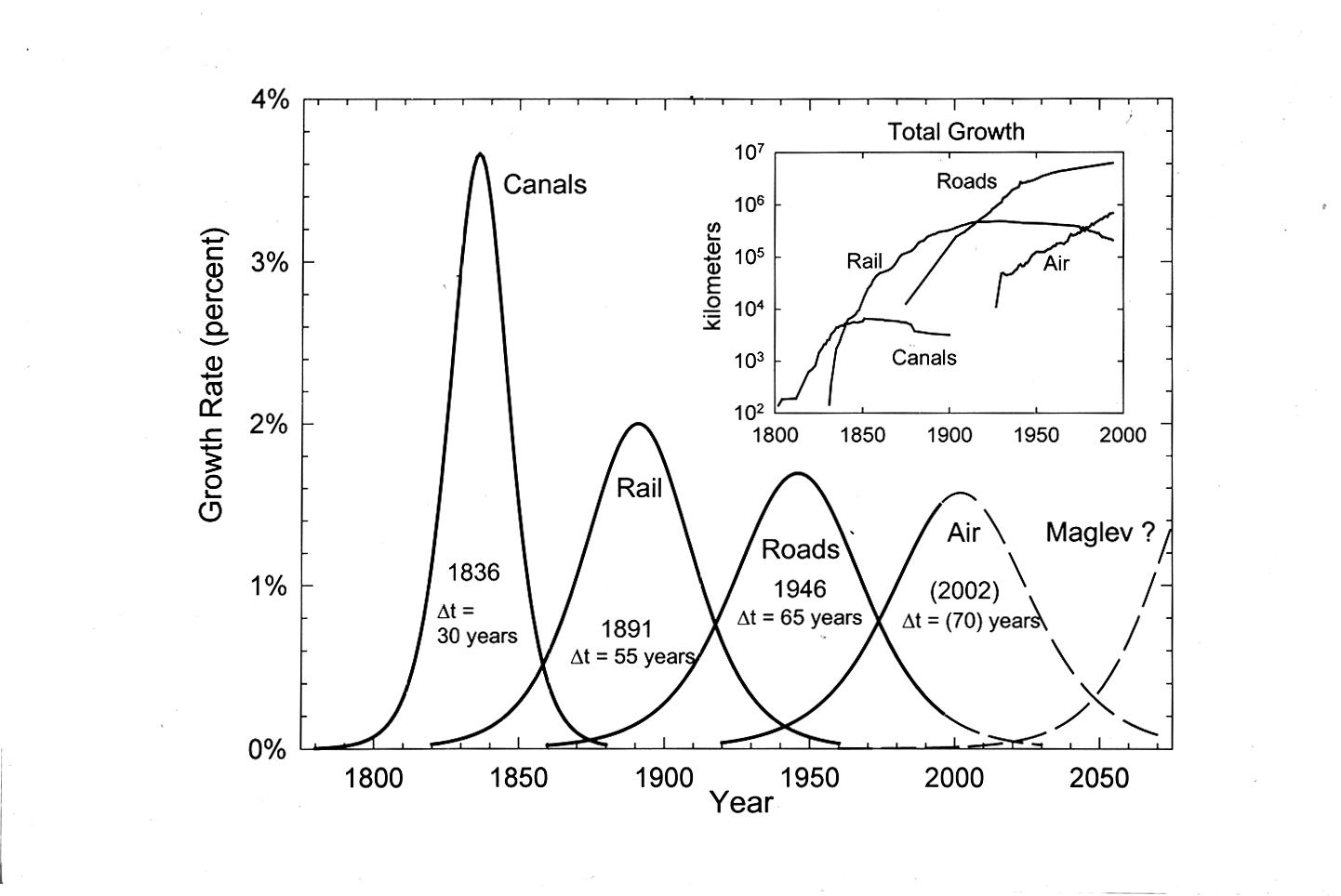

Figure 6. Smoothed historic rates of growth (solid lines) of the major components of the US transport infrastructure and conjectures (dashed lines) based on constant dynamics. The rhythm suggests that a new entrant is due now: maglevs. The inset shows the actual growth, which eventually became negative for canals and rail as routes were closed. Δt is the time for the system to grow from 10% to 90% of its extent. Source: Toward Green Mobility: The Evolution of Transport, J. H. Ausubel, C. Marchetti, and P. S. Meyer, European Review 6(2): 137-156 (1998).
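The caption characterizes each curve by Δt, the years needed to grow from 10% to 90% of final extent, but does not state the functional form. Assuming, for illustration, that each smoothed curve is a logistic, Δt fixes the growth rate as ln(81)/Δt. The sketch below uses made-up parameters (`t_mid`, `delta_t`), not values fitted to Figure 6.

```python
import math


def logistic_share(t: float, t_mid: float, delta_t: float, saturation: float = 1.0) -> float:
    """Extent of a system at time t, assuming logistic growth.

    t_mid   -- year the system reaches half its final extent (hypothetical)
    delta_t -- years to grow from 10% to 90% of the final extent
    """
    # For a logistic, going from 10% to 90% takes ln(81)/rate years,
    # so the rate follows directly from delta_t.
    rate = math.log(81) / delta_t
    return saturation / (1.0 + math.exp(-rate * (t - t_mid)))


# Illustrative, made-up parameters, not fitted values from Figure 6.
T_MID, DELTA_T = 1900.0, 50.0
for year in (1875, 1900, 1925):
    print(year, round(logistic_share(year, T_MID, DELTA_T), 2))
```

Half a Δt before the midpoint the system sits at 10% of its extent, and half a Δt after it reaches 90%. This is why a single number can summarize the pace of each infrastructure in the figure.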

References and Notes

[i] A. Walsh, E. Buijsen, and B. Broos, Paulus Potter: Schilderijen, tekeningen en etsen, Waanders, Zwolle, 1994.

[ii] The upper right panel shows Diana and Acteon, from the Metamorphoses of the Roman poet Ovid. Acteon, a hunter, was walking in the forest one day after a successful hunt and intruded in a sacred grove where Diana, the virgin goddess, bathed in a pond. Suddenly, in view of Diana, Acteon became inflamed with love for her. He was changed into a deer, from the hunter to what he hunted. As such, he was killed by his own dogs. This panel was painted by a colleague of Potter.

[iii] P. D. MacLean, The Triune Brain in Evolution: Role in Paleocerebral Functions, Plenum, New York, 1990.

[iv] In some fish ranching, notably most of today’s ranching of salmon, the salmon effectively graze the oceans, as the razorback hogs of a primitive farmer would graze the oak woods. Such aquaculture consists of catching wild “junk” fish or their oil to feed to our herds, such as salmon in pens. We change the form of the fish, adding economic value, but do not address the fundamental question of the total tonnage of the stocks. A shift from this ocean ranching and grazing to true farming of parts of the ocean can spare other parts from the present, ongoing depletion.

[v] J. H. Ausubel, The Great Reversal: Nature’s Chance to Restore Land and Sea, Technology in Society 22(3):289-302, 2000; M. Markels, Jr., Method of improving production of seafood. US Patent 5,433,173, July 18, 1995, Washington DC.

[vi] Along with its iron supplement, such an ocean farm would annually require about 4 million tons of nitrogen fertilizer, 1/20th of the synthetic fertilizers used by all land farms.

[vii] P. E. Waggoner and J. H. Ausubel, How Much Will Feeding More and Wealthier People Encroach on Nature? Population and Development Review 27(2):239-257, 200.

[viii] G. Leach, Energy and Food Production, IPC Science and Technology Press, Guildford UK, 1976, quantifies the energy costs of a range of food systems.

[ix] I. K. Wernick, P. E. Waggoner, and J. H. Ausubel, Searching for Leverage to Conserve Forests: The Industrial Ecology of Wood Products in the U.S., Journal of Industrial Ecology 1(3):125-145, 1997.

[x] In the United States, for example, leftovers from lumber mills account for more than a third of the wood chips turned into pulp and paper; what is still left after that is burned for power.

[xi] J. H. Ausubel, C. Marchetti, and P. S. Meyer, Toward Green Mobility: The Evolution of Transport, European Review 6(2):143-162, 1998.

[xii] P. E. Waggoner, J. H. Ausubel, I. K. Wernick, Lightening the Tread of Population on the Land: American Examples, Population and Development Review 22(3):531-545, 1996.

[xiii] www.swissmetro.com

[xiv] J. H. Ausubel, The Census of Marine Life: Progress and Prospects, Fisheries 26(7):33-36, 2001.

[xv] D. G. Victor and J. H. Ausubel, Restoring the Forests, Foreign Affairs 79(6):127-144, 2000.

The Evolution of Transport

This paper appeared in the April/May 2001 issue of the magazine The Industrial Physicist, on pages 20-24.

See also:

Toward Green Mobility: The Evolution of Transport, which appeared in the journal The European Review, Vol. 6, No. 2, 137-156 (1998).

Toward Green Mobility: The Evolution of Transport

Summary:

We envision a transport system producing zero emissions and sparing the surface landscape, while people on average range hundreds of kilometers daily. We believe this prospect of ‘green mobility’ is consistent in general principles with historical evolution. We lay out these general principles, extracted from widespread observations of human behavior over long periods, and use them to explain past transport and to project the next 50 to 100 years. Our picture emphasizes the slow penetration of new transport technologies that add speed as they substitute for older ones in the allocation of travel time. We discuss serially and in increasing detail railroads, cars, aeroplanes, and magnetically levitated trains (maglevs).

Introduction

Transport matters for the human environment. Its performance characteristics shape settlement patterns. Its infrastructures transform the landscape. It consumes about one-third of all energy in a country such as the United States. And transport emissions strongly influence air quality. Thus, people naturally wonder whether we have a chance for ‘green mobility’, transport systems embedded in the environment so as to impose minimal disturbance.

In this paper we explore the prospect for green mobility. To this end, we have sought to construct a self-consistent picture of mobility in terms of general laws extracted from widespread observations of human behavior over long periods. Here we describe this picture and use the principles to project the likely evolution of the transport system over the next 50 to 100 years.

Our analyses deal mostly with averages. As often emphasized, many vexing problems of transport systems stem from the qualities of distributions, which cause traffic jams as well as costly empty infrastructures. 1 Subsequent elaboration of the system we foresee might address its robustness in light of fluctuations of various kinds. Although the United States provides most illustrations, the principles apply to all populations and could be used to explain the past and project the future wherever data suffice.

General travel laws and early history

Understanding mobility begins with the biological: humans are territorial animals and instinctively try to maximize territory. 2,3,4 The reason is that territory equates with opportunities and resources.

However, there are constraints on range: essentially, time and money. In this regard, we subscribe to the fundamental insights on regularities in household travel patterns and their relationships gained by Zahavi and associates in studies for the World Bank and the US Department of Transportation in the 1970s and early 1980s. 5,6,7,8

According to Zahavi, throughout history and in contemporary societies spanning the full range of economic development, people average about 1 hour per day traveling. This is the travel time budget. Schafer and Victor, who surveyed many travel time studies in the decade after Zahavi’s work, find that the budget continues to hover around one hour. 9 Figure 1 shows representative data for studies of the United States, the state of California, and sites in about a dozen other countries since 1965. We take special note of three careful studies done for the city of Tokyo as well as one averaging 131 Japanese cities. 10 Although Tokyo is often mentioned as a place where people commute for many hours daily, the travel time budget proves to be about 70 minutes, and the Japanese urban average is exactly one hour. Switzerland, generally a source of reliable data, shows a 70-minute travel time budget. 11

Figure 1. Travel time budgets measured in minutes of travel per person per day, sample of studies. Sources of data: Katiyar and Ohta 10, Orfeuil and Salomon 8, Szalai et al. 14, US Department of Transportation 33,34, Wiley et al. 12, Balzer 13. Other data compiled from diverse sources by Schafer and Victor 9.

The only high outlier we have found comes from a study of 1987-1988 activity patterns of Californians, who reported in diaries and phone surveys that they averaged 109 minutes per day traveling. 12 The survey excluded children under age 11, and the result may also reflect that Californians eat, bank, and conduct other activities in their cars. If this value signaled a lasting change in lifestyle toward more travel, rather than bias in self-reporting or the factors just mentioned, it would be significant. But a study during 1994 of 3,000 Americans, chosen to reflect the national population, including people aged 18-90 in all parts of the country and economic classes, yielded a travel time of only 52 minutes. 13 After California, the next highest value we found in the literature is 90 minutes in Lima, where Peruvians travel from shantytowns to work and markets in half-broken buses.

We will assume for the duration of this paper that one hour of daily travel is the appropriate reference point in mobility studies for considering full populations over extended periods. Variations around this time likely owe to diverse survey methods and coverage, for example, in including walking or excluding weekends, or to local fluctuations. 14

Why 1 hour more or less for travel? Perhaps a basic instinct about risk sets this budget. Travel is exposure and thus risky as well as rewarding. MacLean reports evolutionary stability in the parts of the brain that determine daily routine in animals from the human back to the lizard, which emerges slowly and cautiously in the morning, forages locally, later forages farther afield, returns to the shelter area, and finally retires. 15 Human accident rates measured against time also exhibit homeostasis. 16

The fraction of income as well as time that people spend on travel remains narrowly bounded. The travel money budget fluctuates between about 11% and 15% of personal disposable income (Table 1).

Table 1. Travel expenditures, percent of disposable income, various studies. Sources of data: Eurostat 40, UK Department of Transport 41, Schafer and Victor 9, Central Statistics Office 42, US Bureau of the Census 21,28, Zahavi 5, Institut National de la Statistique et des Études Économiques 43.

| Country | Year | Percent of disposable income spent on travel |
|---|---|---|
| United States | 1963-1975 | 13.2 |
| | 1980 | 13.5 |
| | 1990 | 12.1 |
| | 1994 | 11.4 |
| United Kingdom | 1972 | 11.7 |
| | 1991 | 15.0 |
| | 1994 | 15.6 |
| West Germany | 1971-1974 | 11.3 |
| | 1991 | 14.0 |
| France | 1970 | 14.0 |
| | 1991 | 14.8 |
| | 1995 | 14.5 |
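To check how narrowly the money budget is bounded, the sketch below transcribes Table 1 and computes the overall extremes and the spread within each country. The data come straight from the table; the dictionary layout and function names are merely illustrative choices.

```python
# Values transcribed from Table 1 (percent of disposable income spent on travel).
TRAVEL_SHARE = {
    "United States": {"1963-1975": 13.2, "1980": 13.5, "1990": 12.1, "1994": 11.4},
    "United Kingdom": {"1972": 11.7, "1991": 15.0, "1994": 15.6},
    "West Germany": {"1971-1974": 11.3, "1991": 14.0},
    "France": {"1970": 14.0, "1991": 14.8, "1995": 14.5},
}


def share_bounds(table):
    """Return the (min, max) share across every country and year."""
    values = [v for by_year in table.values() for v in by_year.values()]
    return min(values), max(values)


def spread_by_country(table):
    """Return max minus min share for each country, in percentage points."""
    return {c: round(max(v.values()) - min(v.values()), 1) for c, v in table.items()}


low, high = share_bounds(TRAVEL_SHARE)
print(f"All studies: {low}% to {high}%")
for country, spread in spread_by_country(TRAVEL_SHARE).items():
    print(f"{country}: spread of {spread} percentage points")
```

Across all studies the share runs from 11.3% to 15.6%, and no single country varies by more than about 4 percentage points over two decades or more.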

The constant time and money budgets permit the interpretation of much of the history of movement. Their implication is that speed, low-cost speed, is the goal of transport systems. People allocate time and money to maximize distance, that is, territory. In turn, when people gain speed, they travel farther rather than make more trips.
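With the time budget held at about one hour, daily range is simply average speed multiplied by that hour, so any gain in speed converts directly into distance. In the minimal sketch below, only the one-hour budget comes from the text. The mode speeds are hypothetical door-to-door averages chosen for illustration, not figures from the paper.

```python
TRAVEL_TIME_BUDGET_H = 1.0  # about one hour of travel per person per day


def daily_range_km(mean_speed_kmh: float, budget_h: float = TRAVEL_TIME_BUDGET_H) -> float:
    """Distance covered per day when the time budget, not the trip count, is fixed."""
    return mean_speed_kmh * budget_h


# Hypothetical average speeds in km/h, for illustration only.
MODES = {"walking": 5.0, "car (assumed)": 50.0, "maglev (assumed)": 500.0}

for mode, speed in MODES.items():
    print(f"{mode}: {daily_range_km(speed):.0f} km per day")
```

Because time is fixed, doubling speed doubles range. That is the sense in which a fast mode such as the maglev would let people on average range hundreds of kilometers daily without traveling longer.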